2024-August

Substack

“This incident shows clearly that Windows must prioritize change and innovation in the area of end-to-end resilience. […] Examples of innovation include the recently announced VBS enclaves, which provide an isolated compute environment that does not require kernel mode drivers to be tamper resistant.” - John Cable, Microsoft VP of Program management

― Microsoft: Azure Slowdown - App Economy Insights [Link]

Microsoft Azure decelerated by 1 point sequentially to 30% YoY, while Google Cloud accelerated.

Recent business highlights: 1) a global IT outage caused by a faulty CrowdStrike update affected 8.5 M Windows PCs, 2) Microsoft is facing an investigation by the UK’s CMA over its hiring of former Inflection AI staff and its partnership with the startup.

According to Nielsen, Prime Video captured 3.1% of US TV Time in June (a decline of 0.1 points Y/Y). Prime Video captures just over a third of Netflix’s market share (and more than Disney+ and Paramount+ combined).

As Amazon continues to invest in live sports and expand its content catalog, Prime members may find themselves spending more time with the service they already pay for. Prime Video may have started as a loss leader, but if it can become the go-to streaming platform for ad-supported content, it could evolve into a significant revenue driver, even for a behemoth like Amazon.

― Amazon: This Team is Cooking - App Economy Insights [Link]

- Portfolio rebalancing: Apple stock surged 24% between May 1st and June 30th. As a result, Buffett would have seen AAPL take up nearly 60% of Berkshire’s portfolio. A stake reduction is a typical move to rebalance a portfolio and lower its risk profile.

- Valuation: Apple is valued above 30 times forward earnings. That makes it less likely to deliver alpha for shareholders. It’s possible Buffett felt like the odds of market-beating returns at this level were subpar.

- Taxes matter: Buffett told shareholders in May that he finds the current tax rate on capital gains relatively low, potentially prompting him to realize his significant AAPL gains while the rate is reasonable.

- No place to hide: Buffett is building up his cash pile and waiting for a “fat pitch.”

The Buffett Indicator: This ratio compares the total market capitalization of US stocks to the country’s GDP. It’s often used to gauge whether stock valuations in the US are overinflated. It reached 138% during the dot-com bubble, which was considered high at the time. Lo and behold, the indicator hit 190% at the end of June.

― Berkshire Slashes Apple Stake - App Economy Insights [Link]
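The Buffett Indicator quoted above is a simple ratio, so it can be sketched in a few lines. This is only an illustration: the function name `buffett_indicator` and the input values are hypothetical placeholders, chosen so that the ratio lands on the 190% reading from the quote; they are not actual market cap or GDP figures.

```python
def buffett_indicator(total_market_cap: float, gdp: float) -> float:
    """Return total stock market capitalization as a percentage of GDP."""
    if gdp <= 0:
        raise ValueError("gdp must be positive")
    return total_market_cap / gdp * 100.0


# Hypothetical inputs in the same unit (e.g. trillions of dollars),
# picked only so the ratio matches the 190% reading quoted above.
example_market_cap = 19.0
example_gdp = 10.0

reading = buffett_indicator(example_market_cap, example_gdp)
dot_com_level = 138.0  # percentage quoted above for the dot-com bubble

print(f"Indicator: {reading:.0f}%")
print(f"Points above the dot-com level: {reading - dot_com_level:.0f}")
```

Both inputs must be in the same currency and unit for the percentage to be meaningful.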

Factors of today’s macro environment: 1) The AI Bubble, 2) The Yen Carry Trade, 3) Potential Recession.

Llama 3.1’s Impact on China, Kuaishou’s AI Video Generator Goes Global, and Alibaba Backs $2.8B AI Firm - Recode China AI [Link]

Highlights key AI news in China: 1) Kuaishou’s global launch of its AI video generator, Kling AI, and Zhipu AI’s introduction of Ying, show China’s progress in AI video generation. 2) Alibaba, Tencent, and state-backed AI funds poured $690 M into the $2.8 B AI firm Baichuan AI.

State of AI in Venture Capital 2024 - AI Supremacy [Link]

“AI CapEx” is a euphemism for building physical data centers with land, power, steel and industrial capacity. There’s been a lot of investment in data centers and AI chips, but no AGI in sight. You can buy all the shovels you want, but if the mine ain’t making money, we have a problem. If there’s no gold in the mine, the shovels aren’t worth very much. BigTech hyperscalers and VCs might have gotten this all wrong.

― OpenAI’s SearchGPT and the Impossible Promises of AI - AI Supremacy [Link]

This article points out that the industry is facing immense financial pressures and strategic uncertainties. The concerns are as follows: 1) OpenAI’s operating costs exceed $8 B, with a projected loss of $5 B in 2024, 2) the annual AI revenue needed to justify the investment in data centers and chips is unlikely to be achieved by 2025, 3) integrating SearchGPT into ChatGPT is a risky bet because users don’t use ChatGPT frequently enough for it to be a successful search tool, 4) competition has pushed many AI startups out of the market, and AI innovation alone cannot compete with market dominance (e.g. Microsoft’s attempts to integrate AI into Bing), 5) big tech companies have accepted that they are possibly over-investing in AI due to FOMO (fear of missing out), leading to unsustainable financial practices, 6) Nvidia’s revenue is risky since it comes mostly from a few tech giants.

The Morningstar framework: The framework is built on 5 “moat sources”:

- Intangible assets (Coca-Cola)

- Switching Costs (Oracle)

- Network Effects (CME Group)

- Cost Advantages (UPS)

- Efficient Scale (Kinder Morgan)

― 5 Wide Moat Businesses - Invest in Quality [Link]

Intangible assets: Coca-Cola, Sanofi, Unilever, Johnson & Johnson.

Switching Costs: Oracle, Intuitive Surgical, ADP.

Network Effect: Mastercard, eBay, CME Group, Facebook.

Cost Advantage: Amazon, Novo Nordisk.

Efficient Scale: UPS, National Grid, Carnival.

AI: Are we in another dot-com bubble? - AI Musings by Mu [Link]

A comprehensive analysis comparing the current AI cycle to the internet/telecom cycle of the 90s. The author examines the technological, economic, and capital differences between the two eras and concludes that while a bubble may be inevitable in the long run, we are still far from reaching that point.

Key points:

Similarities between the AI cycle since Nov 2022 and the internet cycle of the 90s: 1) Both cycles have similar ecosystem structures, with companies providing infrastructure, enablement, and applications. 2) Both occur amid equity bull markets, driven by favorable economic conditions. 3) Both require significant infrastructure investments. 4) Both attract significant VC interest, leading to high valuations.

Differences between the AI cycle since Nov 2022 and the internet cycle of the 90s: 1) AI companies are generating revenue much earlier than dot-com companies did, with more sustainable business models. 2) The current economic environment is less robust than in the 90s, leading to a more cautious investment climate. 3) AI investments are primarily equity-funded by big tech, unlike the debt-financed dot-com boom. 4) Valuations of AI companies, while high, are more grounded in near-term earnings than those during the dot-com era.

Bubble Likelihood: The article argues that while there are risks, the current AI cycle is less likely to be in a bubble compared to the dot-com era. The more cautious investment environment, sustainable business models, and the structured flow of capital contribute to this conclusion.

Lessons from Dot-Com Bubble: 1) Infrastructure buildouts take time. 2) Being a first mover can be a disadvantage, as seen with early internet companies that were later overtaken by more successful competitors. 3) The importance of being critical and not getting swept up in the hype, learning from the past to navigate the present.

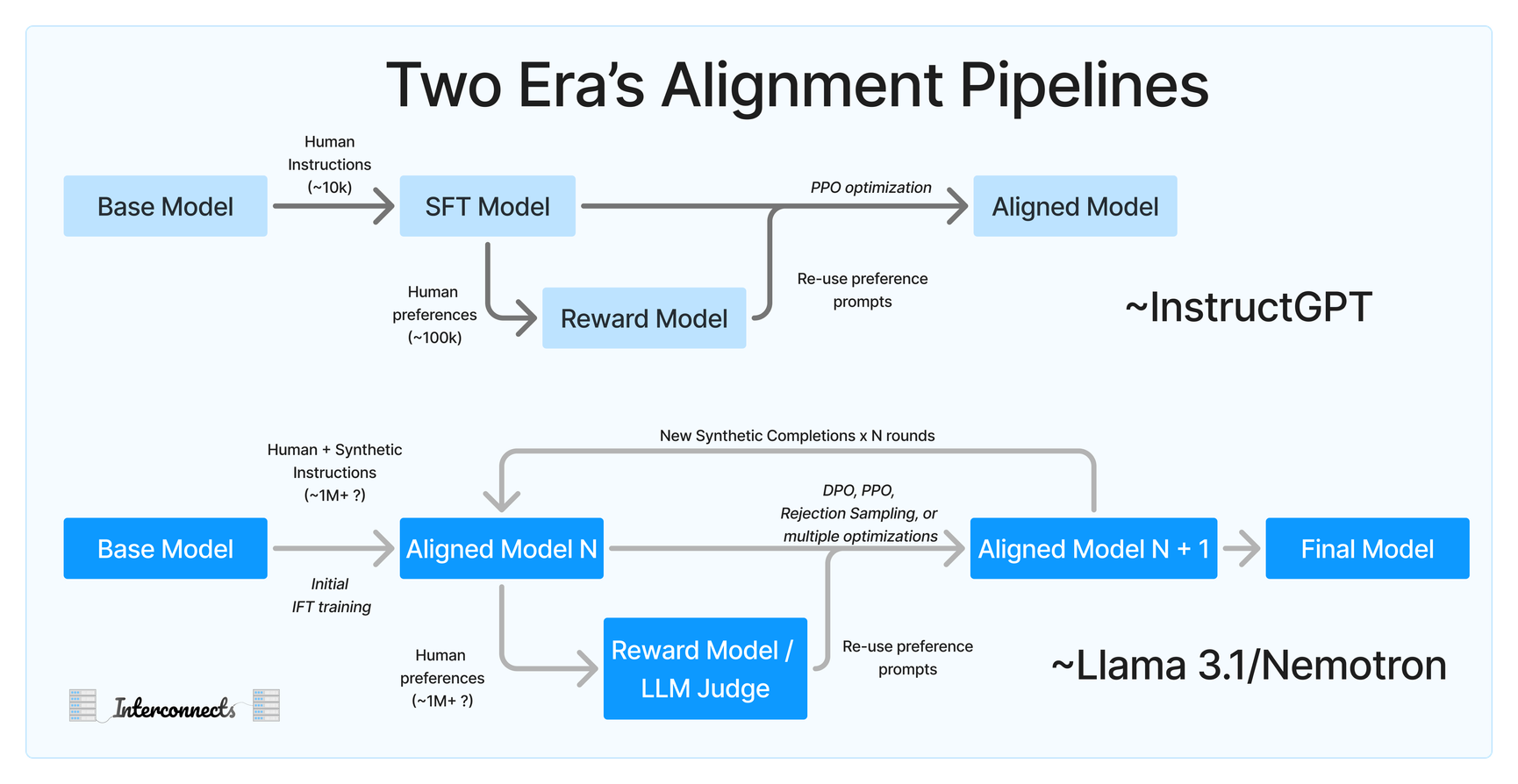

To recap the above post, they do the new normal, including:

- Human preference data and HelpSteer style grading of attributes for regularization.

- High-quality reward models for filtering.

- Replacement of human demonstrations with model completions in some domains.

- Multi-round RLHF — “We iterate data and model qualities jointly to improve them in a unified flywheel.”

- A very large suite of data curation techniques, including prompt re-writing and refining for expansion of costly datasets, filtering math and code answers with outcomes (correctness or execution), filtering with LLMs-as-a-judge, and other new normal stuff.

― A recipe for frontier model post-training - Nathan Lambert, Interconnects [Link]

Recent papers and reports (Llama 3.1, Nemotron 340B, and Apple foundation model) have made it clear that a new default recipe exists for high-quality RLHF. It has a few assumptions:

- Synthetic data can be of higher quality than humans, especially for demonstrations on challenging tasks.

- Reinforcement learning from human feedback (RLHF) can scale far further than instruction tuning.

- It takes multiple rounds of training and generation to reach your best model.

- Data filtering is the most important part of training.

It becomes clear that the post training is highly correlated with the style and robustness gains.

The new normal seems to have converged as follows:

OpenAI and Generative AI are at a Crossroads - AI Supremacy [Link]

Views of AI landscape.

At least five interesting things for your weekend (#45) - Noahpinion [Link]

GPT-5: Everything You Need to Know - The Algorithmic Bridge [Link]

New LLM Pre-training and Post-training Paradigms - Ahead of AI [Link]

You don’t have to be a manager - Elena’s Growth Scoop [Link]

Eric Schmidt’s AI prophecy: The next two years will shock you - Exponential View [Link]

Former Google CEO Eric Schmidt predicts rapid advancements in AI, where large language models and agent-based systems with text-to-action capabilities could converge, causing tremendous economic and technological disruption.

Meta: Better Sorry Than Safe - App Economy Insights [Link]

Judge Amit Mehta handed down the ruling, finding that the tech giant has been using its dominance in the search market to favor its own products and services, making it harder for rivals to gain a foothold.

The Justice Department had sued Alphabet over its multi-billion dollar deals with smartphone manufacturers and wireless providers to be the default search engine on mobile devices.

Based on the $87 billion in Services revenue for Apple in 2023, Alphabet accounted for 25% of Apple Services, and over 20% of Apple’s net profit.

― Google’s Antitrust Loss - App Economy Insights [Link]
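As a rough cross-check of the figures quoted above, the Services revenue and Alphabet's share can be multiplied to get an implied annual payment. This is a back-of-the-envelope sketch that takes the quoted numbers at face value; the variable names are hypothetical.

```python
# Figures taken from the quote above.
apple_services_revenue_bn = 87.0   # Apple Services revenue in 2023, $ billions
alphabet_share_of_services = 0.25  # Alphabet's share of Apple Services

# Implied annual payment from Alphabet to Apple, in $ billions.
implied_payment_bn = apple_services_revenue_bn * alphabet_share_of_services

print(f"Implied Alphabet payment: ${implied_payment_bn:.2f}B per year")
```

The result is in the same ballpark as the roughly $20 billion a year mentioned in the All-in Podcast quote at the end of these notes.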

Potential remedies are 1) ending exclusivity deals: prohibit Alphabet from securing exclusive agreements with partners like Apple, 2) behavioral remedies: restrict Alphabet’s conduct e.g. bundling some of its services together, 3) structural remedies: divest some of Alphabet’s businesses e.g. search advertising, YouTube, smartphone business.

A critical component of AMD’s recent strategy is to go beyond advanced chips and offer software (for developers to access the capabilities through applications) and now tailored system solutions (to optimize the data center for performance).

― AMD Acquires ZT Systems - App Economy Insights [Link]

In its presentation, AMD mentioned that 1) the system design and enablement engineers at ZT Systems will enable AMD to design world-class AI infrastructure delivered through an ecosystem of OEM and ODM partners, 2) ZT Systems’ extensive cloud solutions experience will help accelerate the deployment of AMD-powered AI infrastructure at scale with cloud customers, 3) AMD will deliver optimized solutions to market with its CPU, GPU, networking, and now systems solutions.

Uber’s continued growth and expanding margins today are encouraging.

- More users and more trips in mature markets.

- Strong advertising performance showing product-market fit.

- Multi-product adoption, improving churn, and lowering acquisition costs

― Uber’s Big Autonomy Plan - App Economy Insights [Link]

Kind of interested in how Uber’s long-term vision differs from that of Tesla or Waymo.

Business highlights: Autonomous vehicles (AV): ongoing collaborations with 10 AV companies across all segments fueled the surge in Uber’s AV trip growth. AVs on Uber are a win-win because of Uber’s massive network.

Looking forward: 1) The advertising business reached a revenue run rate of over $1 billion, compared to $650 million a year ago, 2) Uber has made many strategic investments, representing over $6 billion in equity stakes today.
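The advertising run-rate figures above imply a year-over-year growth rate that is easy to work out. This is a minimal sketch with hypothetical names; since the note says "over $1 billion", $1,000 million is treated as a lower bound, so the computed growth is a floor rather than an exact figure.

```python
def growth_rate(current: float, previous: float) -> float:
    """Return fractional growth from `previous` to `current`."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return current / previous - 1.0


ad_run_rate_now_m = 1_000.0     # $ millions; "over $1 billion" (lower bound)
ad_run_rate_year_ago_m = 650.0  # $ millions, a year ago

ad_growth = growth_rate(ad_run_rate_now_m, ad_run_rate_year_ago_m)
print(f"Advertising run-rate growth: at least {ad_growth:.1%}")
```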

The Corporate Life Cycle: Managing, Valuation and Investing Implications - Musings on Markets [Link]

This article is a concise summary of Aswath Damodaran’s new book. There is also a YouTube course series.

52 Reasons to Fear that Technological Progress Is Reversing - The Honest Broker [Link]

A very good article summarizing recent warning signs, many of which all of us have observed. For example, 1) people refuse to upgrade their operating systems, probably because the risk has started to outweigh the performance gains, 2) scientific journals are now filled with thousands of fake AI-generated papers, which is very concerning, 3) degrees are starting to lose value because tuition now outweighs the perceived benefits, 4) Google overwhelms search results with affiliate-driven content, often of low quality. Its search results have become dominated by ads, thinly veiled as recommendations, and content designed to maximize affiliate commissions rather than genuinely help users, leading to a degraded user experience, 5) people are addicted to their phones because tech companies have conducted comprehensive and rigorous research to find ways to design and improve products so that people stick to them.

(1) Instead of pursuing truth, new technologies aim to replace it with mimicry and fantasy.

(2) This has empowered shamming, scamming & spamming at unprecedented levels.

(3) Users are not the real customers—so billions of people must suffer to advance the interests of a tiny group of stakeholders.

(4) Real people become inputs in a profit-maximization scheme which requires that they are constantly controlled and manipulated.

(5) In this environment, everything gets viewed as a resource or input and the natural world (including us) is ruthlessly exploited.

(6) The groundwork for this was laid by theorists who replaced truth with power.

(7) In the past, governments controlled huge technologies (nuclear power, spaceships, etc.) so they were somewhat accountable to citizens, but now the most powerful new tech is in private hands, and the public good is no longer even considered.

(8) So much wealth is concentrated in the hands of the winners in these processes, that they literally become more powerful than nation states.

(9) With this shift in power, even the most independent politicians turn into controlled agents working for the technocracy — making a mockery of democracy.

(10) If you oppose this command-and-control tech you can be theoretically (and often literally) erased, suspended, deplatformed, shadow-banned, surveilled, de-banked, digitally faked, etc. —so who will dare?

― 10 Reasons Why Technological Progress Is Now Reversing - Ted Gioia [Link]

Good thinking and perspectives. We humans are all adapting to a constantly changing life driven by the advancement of technology. Ethical boundaries become blurred. Truth and falsehood are redefined. We are farther away from nature and closer to artificiality. We are farther away from truth and closer to hallucination and scams. This is inevitable. The point is how we can maximize our chances of correcting ourselves along the way.

“If you want to be a good evaluator of businesses,” said Buffett, “you really ought to figure out a way — without too much personal damage — to run a lousy business for a while. You’ll learn a whole lot more about business by actually struggling with a terrible business for a couple of years than you learn by getting into a very good one where the business itself is so good that you can’t mess it up.”

― The Lessons of a Lousy Business - Kingswell [Link]

This is about one of Warren Buffett’s stories: his investment in Dempster Mill Manufacturing Company. Three lessons:

Don’t throw good money after bad

Avoid the mistake of continuing to invest in something that is not working, hoping to recover the losses. Warren Buffett learned from his experience to avoid reinvesting in failing operations. Instead of pouring more money into trying to revive the company’s subpar operations, Buffett used the temporary profits from cost-cutting and tax advantages to invest in other, more promising ventures. This approach allowed him to build a successful business empire rather than wasting resources on a losing cause.

Look for .400 hitters

Warren Buffett prioritizes finding exceptional managers—like those who are as rare and skilled as a .400 hitter in baseball—when acquiring companies. By securing top-tier managers, Buffett can delegate the day-to-day operations with confidence, allowing him to focus on what he does best: allocating capital. This approach simplifies his role and ensures that the companies he acquires are in capable hands, which is a crucial aspect of his investment strategy.

Some things are worth more than money

This is the value Warren Buffett places on the impact of his business decisions on people and communities. Despite the financial losses from Berkshire Hathaway’s textile operations, Buffett kept them running for many years because they were a major source of employment for a struggling region. This decision reflects his consideration of the social and human aspects of business, prioritizing the well-being of the community over pure financial gain.

Pandemic Darlings That Never Bounced Back - Investment Talk [Link]

This article is a reminder that highly speculative situations rarely turn out well in the long run. Many of the speculative stocks that were supposedly going to take advantage of “permanent” societal changes in 2020 and 2021 collapsed and remain far below their highs.

My intuitive explanation is that a business that benefits from the sudden impact of COVID on society is not necessarily stable or invulnerable. It probably only works in the abnormal situation where COVID was widespread, and that situation does not last long and people do not enjoy it. A business that is exposed to this societal change is tested by it, and the test shows whether normal society sticks to or prefers that business. You should never underestimate this stickiness or preference, which is like a moat.

We have lots of data, but none of it means much until you attach a story to it about what you think it means and what you think people will do with it next. That seems obvious to me. But ask forecasters if they think the majority of what they do is storytelling and you’ll get blank stares. At best. It never seems like storytelling when you’re basing a forecast in data.

[History] cannot be interpreted without the aid of imagination and intuition. The sheer quantity of evidence is so overwhelming that selection is inevitable. Where there is selection there is art. Those who read history tend to look for what proves them right and confirms their personal opinions. They defend loyalties. They read with a purpose to affirm or to attack. They resist inconvenient truth since everyone wants to be on the side of the angels.

― A Number From Today and A Story About Tomorrow - Morgan Housel @ Collabfund [Link]

AI for Non-Techies: Top Tools for Search, Agent Building, Academic Paper Reviews & Sales Automation - AI Supremacy [Link]

China’s Humanoid Robots, Former Huawei Genius’ Needle-Threading Robot, and Big Tech Reap AI Rewards - Tony Peng [Link]

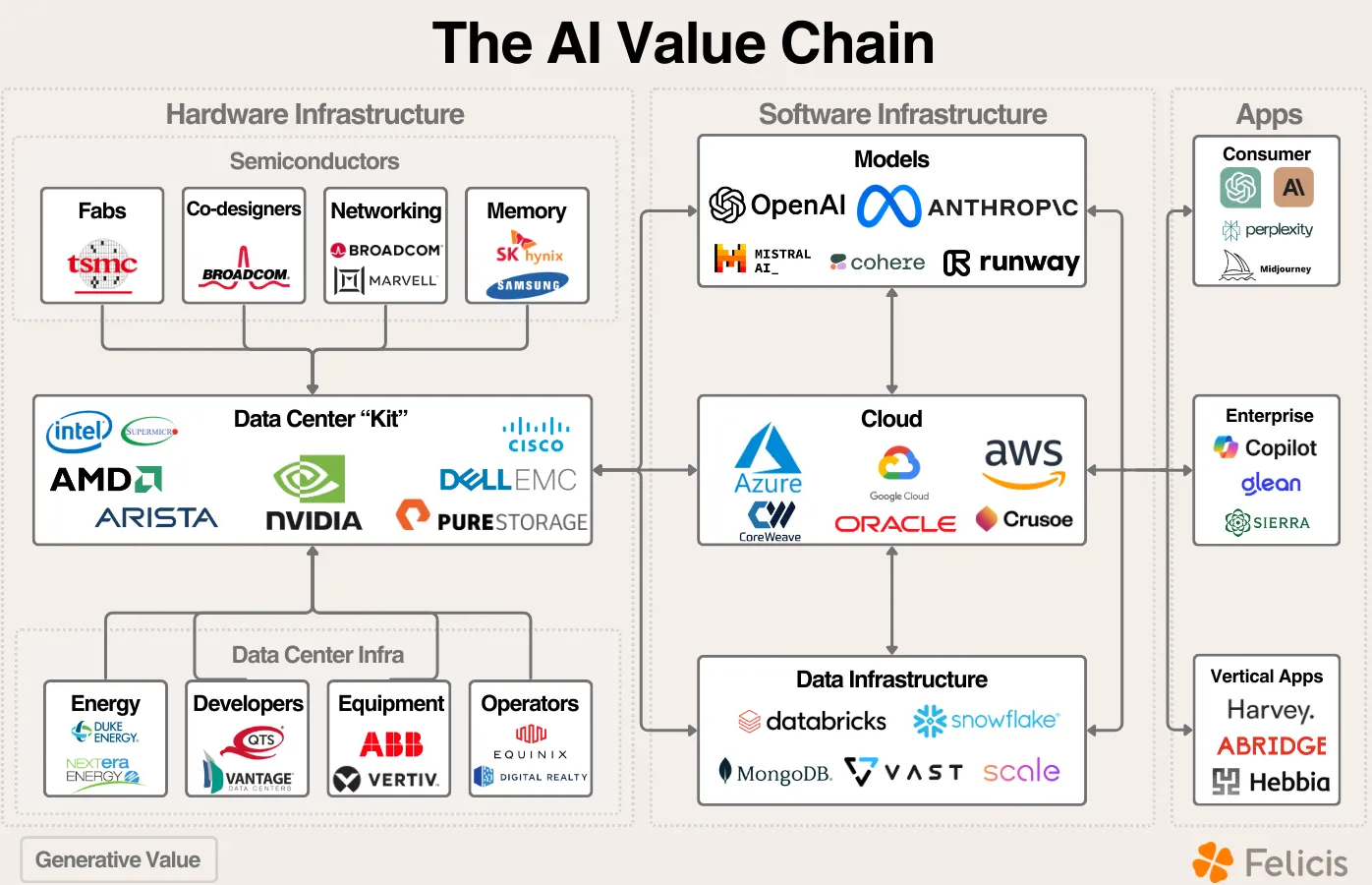

AI applications will ultimately determine the revenue created across the AI value chain. The primary question in AI is this: “What problems is AI solving? How large are the scale of those problems? What infrastructure needs to be in place to support those applications?”

― Ghost in the Machine: The AI Value Chain [Link]

It’s important to think about where value accrues along the AI Value Chain:

- New Grad or L3

  - Stay hungry

    - Listen to team meetings to identify areas.

    - Ask your team leads where you can help.

  - Self-nominate

    - Review your team’s backlog and identify “nice to have” items.

    - Come forward when someone is looking for assistance.

  - Be curious

    - Shadow a senior’s coding practices.

    - Clarify tasks given to you and understand the context of the larger goals.

- Mid-level or L4

  - Take ownership

    - “How can others benefit from this work?”.

    - Pick up anything dropped on the floor, don’t complain, and take it to the finish line.

  - Assist your teammates

  - Align with the next level

    - Find projects to collaborate with key ICs in your organization.

    - Become an expert on a specific area for your team.

- Senior or L5

  - Delegate

    - Create space for others to grow.

    - Scale yourself by delegating work and grow your impact.

  - Clear Communication

    - Invest in mastering concise communication.

      - ❌ “The functionality of the module should be enhanced to provide increased flexibility and adaptability for the end user, with a focus on streamlining the overall workflow process and enhancing the overall user experience.”

      - ✅ “We need to improve the module so it’s easier to use and can handle a wider variety of tasks, making the user’s workflow smoother.”

    - Be intentional with everything you say.

      - ❌ “I think if we decide to make this change, our downstream systems might suffer.”

      - ✅ “This change increases latency by 20%, breaking service X.”

― Build Your Credibility As You Grow - Leadership Letters [Link]

Harris makes a big mistake by embracing price controls - Noahpinion [Link]

The main supporting points:

- Ineffectiveness of Price Controls: The article argues that price controls on groceries are likely to be either ineffectual or harmful. If implemented, they could lead to shortages as grocery stores operate on razor-thin profit margins. If these stores are forced to sell goods at a loss due to government-mandated price controls, they might reduce supply, leading to empty shelves and potential scarcity, similar to situations seen in the Soviet Union and Venezuela.

- Inflation is Already Under Control: The article points out that grocery inflation has already stabilized, with prices having flatlined since early 2023. This suggests that the problem Harris is trying to solve with price controls—rising grocery prices—doesn’t actually exist anymore. Implementing price controls now could be seen as a solution in search of a problem, leading to unnecessary economic distortions.

- Legal and Political Risks: Harris’s proposal involves using the Federal Trade Commission (FTC) to enforce price controls, which the article argues is beyond the agency’s current legal authority. This could require new legislation, potentially face legal challenges, and expand the FTC’s powers in ways that could be problematic. The risk is that this could lead to a slippery slope of further government intervention in the economy.

- Historical Precedents: Historical examples of price controls, such as in Argentina and the U.S. during the 1970s, show that they often lead to short-term price reductions followed by inflation and shortages once the controls are lifted. The article argues that these historical precedents suggest that the best-case scenario for Harris’s proposal is negligible impact, while the worst-case scenario is significant economic disruption.

- Misguided Focus on Grocery Stores: The article highlights that grocery stores are not the main culprits behind price increases, as their profit margins are extremely low. The real issue may lie with other parts of the supply chain, such as food processors, where there is more evidence of monopoly power. Thus, targeting grocery stores with price controls may not address the root causes of price increases.

Articles and Blogs

In the Age of A.I., What Makes People Unique? - The New Yorker [Link]

How To Get Promoted (Without Getting Lucky) - The Developing Dev [Link]

How to Get Rich (without getting lucky) - Naval @ X [Link]

Key takeaways: 1) know what your organization considers impactful, 2) learn to sell your ideas, set directions, grow and help others, 3) build your brand by embracing accountability and sharing your results, 4) become a good collaborator and be transparent with your manager about goals and gaps, 5) protect your focus time - “what you work on is more important than how hard you work”, do work that you enjoy and that has impact.

Is Consistency Hurting Your Sustainability? - Leadership Letters [Link]

Key takeaways: 1) it’s OK to be inconsistent sometimes; you should update your plan so that it allows flexibility, balances priorities, or adjusts your expectations, 2) life is not a sprint; taking a pause and pushing goals to the future is not always bad, 3) consistency is about never giving up, 4) don’t set consistency as a goal; find out what your real goal is, so that accepting and developing a “bounce-back” plan is possible.

McKinsey’s 2024 annual book recommendations [Link]

I selected some books and added them to my reading list: 1) God, Human, Animal, Machine: Technology, Metaphor, and the Search for Meaning by Meghan O’Gieblyn, 2) Outlive: The Science & Art of Longevity by Peter Attia, 3) The Journey of Leadership: How CEOs Learn to Lead from the Inside Out by Dana Maor, Hans-Werner Kaas, Kurt Strovink, and Ramesh Srinivasan, 4) Slow Productivity: The Lost Art of Accomplishment Without Burnout by Cal Newport, 5) How Legendary Leaders Speak: 451 Proven Communication Strategies of the World’s Top Leaders by Peter D. Andrei.

Paid Advertising 101: A Guide for Startup Founders - Kaya [Link]

Building A Generative AI Platform - Chip Huyen [Link]

This blog post outlines common themes in building generative AI systems. It covers many of the building blocks a company should consider when deploying its models to production.

AI’s $600B Question - David Cahn, Sequoia [Link]

Calculating GPU memory for serving LLMs - Substratus [Link]

Social media for startup founders: A practical guide to building an online presence - a16zcrypto [Link]

Long Context RAG Performance of LLMs - Databricks [Link]

An AI engineer’s tips for writing better AI prompts - coda [Link]

Grok-2 Beta Release - X.AI [Link]

How to Prune and Distill Llama-3.1 8B to an NVIDIA Llama-3.1-Minitron 4B Model - NVIDIA Developer [Link]

A practitioner’s guide to testing and running large GPU clusters for training generative AI models - together.ai [Link]

Competing in search - Benedict Evans [Link]

This article takes a look at the many possible impacts the recent antitrust case against Google may have.

GitHub CEO Thomas Dohmke says the AI industry needs competition to thrive - The Verge [Link]

Databricks vs. Snowflake: What their rivalry reveals about AI’s future - Foundation Capital [Link]

How I Use “AI” - Nicholas Carlini [Link]

DeepMind research scientist shares practical techniques to augment your work with LLMs.

FlexAttention: The Flexibility of PyTorch with the Performance of FlashAttention - PyTorch [Link]

Use FlexAttention in PyTorch to achieve 90% of FlashAttention2’s speed.

Deploy open LLMs with Terraform and Amazon SageMaker - Philschmid [Link]

Sonnet 3.5 for Coding - System Prompt - reddit [Link]

How Product Recommendations Broke Google And ate the internet in the process. - Intelligencer [Link]

- Health.com’s Purifier Reviews: The article mentions that Health.com claims to have tested 67 air purifiers but provides no actual test data. This example supports the point that many product recommendations are not based on rigorous testing, undermining their credibility.

- HouseFresh’s Critique: HouseFresh published a critical assessment of its competitors, pointing out issues like subpar products being recommended due to brand recognition. This supports the argument that the affiliate marketing model is corrupting the integrity of product recommendations.

- Time Stamped and AP Buyline: These brands, operated by Taboola, are presented as examples of how even reputable organizations are now involved in affiliate marketing, blurring the lines between independent journalism and commercial content. This supports the point that the distinction between quality content and affiliate-driven recommendations is becoming increasingly unclear.

- SGE (Search Generative Experience): Google’s AI-powered search results, which aggregate and recommend products directly, are criticized for being misleading and overly simplistic. This supports the idea that even Google’s attempts to solve the problem are falling short, further complicating the search landscape.

These examples illustrate how the proliferation of affiliate-driven content has compromised the quality of online information, leading to a search experience that is more about driving sales than helping users make informed decisions.

Avoiding Bad Guys - Humble Dollar [Link]

The Berkshire Hathaway MBA - The Rational Walk [Link]

A pathway to becoming a great investor.

Syntopical Reading is the highest form of reading because it involves reading a number of books on the same topic analytically and then placing the books in context in relation to one another and the overall subject. This level of reading has the potential to bring about insights that are not found in any one of the books when considered in isolation.

As an example relevant to investors, one might want to conduct an analytical reading of Benjamin Graham’s The Intelligent Investor and Philip Fisher’s Common Stocks and Uncommon Profits and then come to grips with the underlying themes expressed in both volumes while drawing conclusions on investing that might not appear in either book in isolation. This approach can, of course, be applied to other forms of literature including biographies. It is quite possible that a thorough analytical reading of Roger Lowenstein’s Buffett: The Making of an American Capitalist and Alice Schroeder’s The Snowball: Warren Buffett and the Business of Life could lead to insights about Warren Buffett that one could not achieve by reading one of these books in isolation.

― How to Read a Book: The Classic Guide to Intelligent Reading - The Rational Walk [Link]

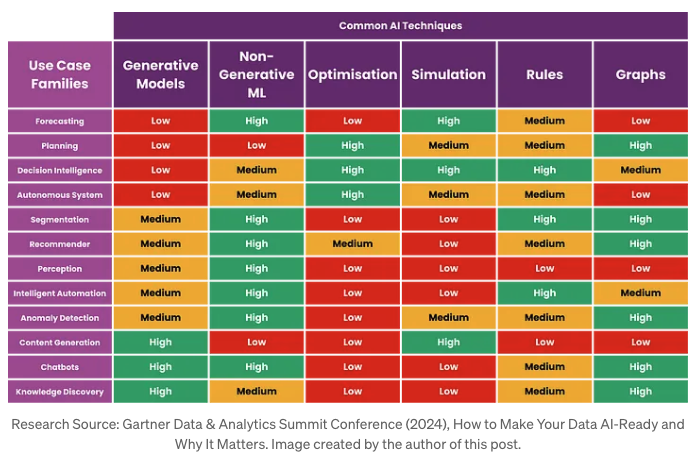

Do Not Use LLM or Generative AI For These Use Cases - Christopher Tao on TowardsAI [Link]

YouTube and Podcasts

Elon Musk: Neuralink and the Future of Humanity | Lex Fridman Podcast [Link]

An eight-hour interview.

Kamala surges, Trump at NABJ, recession fears, Middle East escalation, Ackman postpones IPO - All-in Podcast [Link]

AI and The Next Computing Platforms With Jensen Huang and Mark Zuckerberg - NVIDIA [Link]

Nvidia CEO Jensen Huang and Mark Zuckerberg discuss the future of AI.

There’s a famous quote from an economist, Simon Kuznets, who said there’s four kinds of countries in the world: there’s developed countries, undeveloped countries, Japan, and Argentina. And I think the reason he said that is that Japan has been in the state since the 90s, so they had a massive property and equity bubble collapse. And they’ve not had to deal with anything that looked like typical economic issues since then, and part of it is because the government plays a very big hand in the Japanese economy; there’s a lot of price controls there. So I don’t know, I’m not sure what it is that we can learn there that you can extrapolate to the rest of the world. - Chamath Palihapitiya

When you have massive amounts of debt it definitely limits your flexibility. It’s just arithmetic, you are going to pay for it with either economic contraction, higher taxes, or inflation. Those are the three places it goes. - David Sacks & David Friedberg

Well, so it looks like since the start of the year they've sold 55% of their holdings in Apple. And if you look at the end of the year, this is what Berkshire's stock holdings were in their non-majority-owned businesses, so businesses that they don't own outright, and 50% of their portfolio was in Apple at $174 billion. We obviously saw Apple's stock price peak at its highest level ever just a few days ago, but it has since come down as it was reported that, since the start of the year, Berkshire sold 55% of this position. So some people are arguing that they've got a point of view on the company's strategy and competitive landscape. Some folks have argued that the valuation multiple has gotten too high, trading at nearly 30 times earnings; the stock has risen 900% since Berkshire bought the stock in 2016. Bagger, nicely done, yeah. And some people would argue that the percent of the portfolio is too high at over 50%, as you can see here at the start of the year. But, you know, I'll kind of provide some of the counterarguments. Warren Buffett does not do much analysis on corporate strategy. When he provides reviews of the stocks that he's picked, he often finds and talks a lot about great managers that generate great returns. And he sticks with them, sometimes for many, many decades. The management in this company has not changed. The return profile on cash invested and cash returned has only improved since he put money in. They're generating more cash flow, they're offering more dividends, they're doing more stock buybacks. And he's happy to be concentrated: over the years he's made large bets on single companies, to the point that sometimes he just outright buys the entire company, like he did with Geico in 1996. He always talks a lot about finding a company that is run by great managers, that has a premium product with a nice high margin, a durable moat, and strong brand value.
As I look at kind of what's really gone on here, it feels to me like the difference between Apple and some of the other big holdings in its portfolio is that many of those other businesses are regulated monopolies. So BNSF Railway is regulated by the Federal Railroad Administration. Berkshire Energy, which owns MidAmerican, is a regulated utility. The prices that they charge consumers are set by the government, so they have a market that's locked in, the prices are set, they have locked-in distribution, they have locked-in utility value. And the same is true in the insurance business. Geico's rates are approved and set effectively by state regulators. Berkshire has a moat because they've got the largest capital base and they've got this machine that just keeps generating cash, and the rates are publicly set by government. Apple, however, is not regulated, and it is very clear that Apple is facing very deep and severe financial impact from the regulatory authorities that are overseeing the business. So if you look at the Google antitrust case (we're going to get into the Google deal in a second), there's a real regulatory risk there, because Google's paying Apple $20 billion a year to be the default search engine. Apple also has a very deep relationship with China: they have a lot of manufacturing being done in China and they sell a lot of product into China. So as regulators start to take a harder look, as they said they're going to, at companies' relationships with China, that's a real risk to Apple. Then there's advertising, tracking users, and the subscription fees that are charged to consumers. And most importantly, we've talked a lot about the 30% vig that Apple takes on their App Store and how regulators are now stepping in to take a look at this. So this business is not yet a regulated monopoly. It may be a monopoly in many senses of the word, but it's not regulated yet.
And that transition could be financially painful for Apple. Once they get to the other side, it starts to look a lot more like a large-scale Berkshire-type business. So that's my kind of summary take on what's going on with Apple. - David Friedberg

There’s very little kind of editorialization going on with respect to showing the rankings of the news sources. The ranking of the news sources is typically set by some ranking algorithm. The algorithm is usually around click-throughs, views, popularity of the sites, how many visitors there are, so there are other metrics that drive the order. So for example, if NBC, CNN, and Fox News all have kind of higher rankings than some smaller publication, they’re going to end up higher in the ranking algorithm, because they have a higher quality score. There are also measures of how often people click through and come back, the bounce-back rate, so if they click through an article and then come back, that can actually reduce the ranking versus if they click through and stay on the site. So there’s a lot of factors that go into the ranking algorithm. The thing that probably upsets people is that there isn’t any transparency into this, so there’s no understanding of how these things are ranked, how they’re set, and it’s probably very good guidance and feedback that there should be more transparency and openness. And I’m not necessarily trying to defend anyone’s product or behavior, I’m just saying that there’s certainly a lack of understanding of why one thing is being shown versus another. I’ll also say, Sacks, there’s probably the case, or there might be the case, that there are many more sites putting out pro-Harris articles than there are putting out pro-Trump articles, which can start to overweight the algorithm, as you know, or overweight the rankings that are showing up. So that might also be feeding into this, that the general news media bias is what you’re actually seeing versus a Google bias. - David Friedberg

I just want to show you one chart, because it’s important for you to understand the number of people in journalism. This is from 1971 to 2022: those who say they identify as Republican has just absolutely plummeted. I know this, and this is what I’m trying to explain to you, Sacks: it is an incredible opportunity for your party, since you know you’re passionate about this, to invest in more journalism, invest in more journalists, because I don’t buy this independence, the fact that they’re claiming they’re independent in journalism. I believe that’s cap. I believe they say that the gap is 33% now between people who say they’re Democrats and people who say they’re Republican in journalism. That is a key piece of this problem. And layered on top of it, I agree with you that Google is filled with liberal people, and I agree with you, Chamath, that they need to intervene and put at the top of the search results in news: this is what we’re indexing, this is the percentage that’s left-leaning, this is the percentage that’s right-leaning. There are a lot of organizations that examine and rate publications on their bias, left and right. And that’s something that Google could do that’s very unique and that could move the whole show that you’re saying, which is they could showcase that up top. So to my friends at Google who are listening: do a better job of just being more transparent, so we don’t have this tension in society. - Jason Calacanis

― Yen Carry Trade, Recession odds grow, Buffett cash pile, Google ruled monopoly, Kamala picks Walz - All-in Podcast [Link]

Interviewing Ross Taylor on LLM reasoning, Llama fine-tuning, Galactica, agents - Interconnects AI [Link]

Interviewing Sebastian Raschka on the state of open LLMs, Llama 3.1, and AI education - Interconnects AI [Link]

Here you can see that their (Starbucks) net revenue growth was only 1% year-over-year, but their operating margin’s been on the decline, so they have not really been able to boost their operating margin very much in the past 5 years. So while they’ve raised prices, they’ve had a really hard time making more money, and that’s because the cost of food, the cost of labor, the cost of rent, and the capital expenditures needed to upgrade stores have far exceeded their ability to grow revenue and compete. And now revenue is flatlining because consumers are getting tapped out with respect to how much they can spend, and there’s only so much innovation you can really do to charge more, get people to come in the store more, and drive up revenue. - David Friedberg

Brian Niccol had an incredible reputation prior to Chipotle. He ran Taco Bell for several years and made it one of the most profitable Quick Serve Restaurants (QSR) in the world. He did this by focusing on every nickel. He is notorious for being a cost cutter, for being an efficiency driver, for being a productivity hound. He goes into the business and he figures out every step in the supply chain, every step of the operating activities of the employee in the stores. So he was recruited heavily. I don’t know if you guys remember, Chipotle’s founder was running Chipotle, and at the time there was a lot of investor activism around Chipotle because they were wasting money like no one’s business. The guy had a private jet, he was flying his management team back and forth between Denver and New York. They were spending money on crazy projects. And the board fired the CEO founder of Chipotle and brought in Niccol. Niccol came in and made Chipotle an incredibly profitable, growing business. And the expectation is he’ll come and do the same here, that maybe over the years Starbucks’s success has bred laziness. Starbucks’s success has bred fat, slowness, productivity decline, and this guy is the right guy to come in and find all the nickels. And Brian Niccol is probably the right guy, which is why you’re seeing the stock kind of rally as hard as it has. - David Friedberg

But the thing about Starbucks is they realized early on that when you can customize a consumer experience, the consumer comes back more frequently. So when you see your name written on that cup, you feel like you’re getting your product, you’re not buying an off-the-shelf product, you’re getting a custom personalized experience. What that led to is people customizing their drinks, and what did they find that they liked when they customized their drinks? Sugary sweet add-ons. And then that became more and more of the standard menu, and then that just kept evolving. And that’s just the consumer feedback mechanism working, which, to Chamath’s point, led to 60-gram sugar drinks that are now the standard product at Starbucks, not an espresso or a cappuccino, which is how they started, and it’s really unfortunate. - David Friedberg

So arguably I would say that trying to step in and cap prices will reduce competition, and as a result will reduce investment in improving productivity. And we have seen this countless times: every socialist experiment in human history has started with caps on food, and it has resulted in bread lines, like you see in the image behind me today, as we can see in Soviet Russia. This is a mistake, it is a problem, it is anti-American, it is anti-free market, it is anti-innovation, it is anti-productivity, and ultimately it’s anti-liberty and I cannot stand it. - David Friedberg

To support your point, and what Chamath was messaging on our chat, look at Walmart: the stock’s up 7% today, because they offer lower-priced solutions to consumers, and Dollar General and Dollar Tree are rallying as well. When the market competes, consumers benefit, and there are companies that will win. And the companies that try to price gouge, and the companies that try to charge too much, will lose. Starbucks has been trying to charge too much for sugar water, and they have a real problem they are now tackling. Walmart is trying to bring value to consumers, and they are winning. That is how free markets work. When the government steps in and says here’s how much margin you can make or here’s how much prices should be, it ruins everything, and the entire incentive structure goes away, and you end up with breadlines. - David Friedberg

― Break up Google, Starbucks CEO out, Kamala’s price controls, Boeing disaster, Kursk offensive - All-in Podcast [Link]

I think the thing that matters more than anything else is to make sure that the people that they are letting in are in love and obsessed with the things that MIT is supposed to be great at. … You should not be going to MIT because you think it’s a check mark. You should be going there because you think that there are professors in organic chemistry, in physics, in these disciplines that are really important, who are experts in their fields that you can learn from and become an expert yourself. And I think the problem with all of this other stuff is, once you make it a credential, there are some folks that are only going to MIT because they could get in and because it’s a great credential in their minds, and they shouldn’t go there either. So I think the thing is you have to get back to what matters, which is there are all of these industries that have not progressed that much. And in order for those industries to advance, you need really talented young people who can learn and apprentice and then take over. And I think MIT is one of these rare places that focuses on this part of the physical world that hasn’t had as much progress. And so I just want to make sure that the people that go there actually want to be there for that reason. Gender, race, all that other stuff shouldn’t matter. - Chamath Palihapitiya

Kamala Harris and Tim Walz have only ever worked for government; Trump and Vance have worked in private industry. It’s not just their perspective being colored by the lack of participation in the private economy, but the lack of employment in the private economy: they’ve never worked for a private business, they’ve never been employees of a private business, they’ve never built a private business. I’m not trying to be disparaging, I’m just trying to underline the point here, Chamath, which is the voter’s choice: do you want candidates that are not typically government operatives, or do you want candidates that have spent their whole career as government operatives? And that is effectively what the voters are going to be voting for. And they’re going to make a decision. They may want to have someone that’s going to lead the biggest government in history because they’ve spent their whole careers in government. Or they’re going to say, you know what, the biggest government in history needs to be significantly altered, and we want to bring someone in from the outside that’s worked in private industry. And that is the voter’s choice. That’s one way to view the voter’s choice here. - David Friedberg

I think just because someone has served in government doesn’t mean that they truly even understand what the problems are, or that they’re even the master of government. I mean, you saw this over the past week: we talked about the 818,000 jobs that didn’t exist. They asked Gina Raimondo, the Secretary of Commerce, about this and she just said that’s a Trump lie, and they said no, actually it’s the Bureau of Labor Statistics report that, like, is under the US Secretary of Commerce. She said I’m not familiar with that, so you have people running the government who don’t even know what their own departments are doing. Now I think it’s just a function of the fact that the government is so big and out of control that no one even understands what it does. I think it’s more important to have someone who at least has some experience in the private sector, who truly understands how jobs are created, how wealth is created, what causes inflation, okay. We’ve talked about this before: what causes inflation is the printing of too much money, it’s government spending too much. It is not corporate greed, because corporate greed is a constant; it’s not price gouging. - David Sacks

And how the free market incentivizes the creation of improved productivity, which over time translates into improved prosperity for the society within which that is taking place, that is so critical. And we saw that happen even in China in the last 30 years, when the government allowed entrepreneurship to flourish in certain parts of the country. As a result there were significant productivity gains and they brought a billion people out of poverty - they created a middle class. - David Friedberg

― Massive jobs revision, Kamala wealth tax, polls vs prediction markets, end of race-based admissions - All-in Podcast [Link]

Why We Don’t Own Coupang Stock (CPNG) - Chit Chat Stock Podcast [Link]

Good analysis of CPNG’s business, its advantages and disadvantages, and its future prospects.

Track Record and Risk w/ Guy Spier - We Study Billionaires [Link]

#361 Estée Lauder - Founders [Link]

Joe Carlsmith - Otherness and control in the age of AGI - Dwarkesh Podcast [Link]

Papers and Reports

Gradient Boosting Reinforcement Learning [Link]

Gradient-Boosting RL (GBRL) brings the advantages of Gradient Boosting Trees (GBT) to reinforcement learning.

SpreadsheetLLM: Encoding Spreadsheets for Large Language Models [Link]

Microsoft releases SpreadsheetLLM, an encoding framework designed to improve LLMs’ understanding of spreadsheets. It’s a great paper that outlines how you can turn a spreadsheet into a representation that is useful to a modern LLM. This can be used for Q/A, formatting, and other data operations.

The core innovation in SpreadsheetLLM is the SheetCompressor module, which efficiently compresses and encodes spreadsheets. It includes 1) Structural-anchor-based compression, 2) Inverse index translation, 3) Data-format-aware aggregation.
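To make the second step more concrete, here is a minimal sketch of the general idea behind an inverted index over spreadsheet cells: instead of listing every cell in order, group cell addresses by value and skip empty cells, so repeated values are written once. The function name `inverted_index` and the dict-of-addresses input format are assumptions for illustration, not the paper’s actual implementation (which, among other things, also handles address ranges).

```python
from collections import defaultdict


def inverted_index(cells):
    """Group cell addresses by cell value, skipping empty cells.

    `cells` is a hypothetical input format: a dict mapping an address
    such as "A1" to the cell's value (None or "" means empty).
    """
    index = defaultdict(list)
    for address, value in cells.items():
        if value is None or value == "":
            continue  # empty cells carry no information, so drop them
        index[str(value)].append(address)
    return dict(index)


sheet = {"A1": "Year", "B1": "Sales", "A2": "2023", "B2": "", "A3": "2023"}
print(inverted_index(sheet))
```

For sparse sheets with many repeated values, the grouped form is much shorter than a cell-by-cell dump, which is the point of the compression.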

OpenAI Revenue [Link]

The Llama 3 Herd of Models - Meta Research [Link]

This is a 92-page paper, a comprehensive guide for LLM researchers and engineers.

SPIQA: A Dataset for Multimodal Question Answering on Scientific Papers [Link]

KAN or MLP: A Fairer Comparison [Link]

Controlled study finds MLP generally outperforms KAN across various tasks. MLP outperformed KAN in machine learning (86.16% vs. 85.96%), computer vision (85.88% vs. 77.88%), NLP (80.45% vs. 79.95%), and audio processing (17.74% vs. 15.49%). KAN excelled only in symbolic formula representation (1.2e-3 RMSE vs. 7.4e-3).

NeedleBench: Can LLMs Do Retrieval and Reasoning in 1 Million Context Window? [Link]

Current evaluation methods are inadequate for assessing LLM performance on long contexts, even as demand for reasoning over long texts keeps growing. The authors present NeedleBench, a framework for evaluating the long-context capabilities of LLMs across a range of text lengths. Their experiments show that current LLMs struggle with complex reasoning tasks over long texts, which leaves clear room for improvement.

Retrieval Augmented Generation or Long-Context LLMs? A Comprehensive Study and Hybrid Approach [Link]

This study investigates how large language models handle question-answering tasks under two conditions: when they receive comprehensive context information (long-context) versus when they are given only selected chunks of the necessary information (RAG). It shows that long context surpasses RAG significantly for Gemini-1.5-Pro, GPT-4o and GPT-3.5-Turbo.

A Survey of Prompt Engineering Methods in Large Language Models for Different NLP Tasks [Link]

This paper summarizes 38 prompt engineering techniques for LLM reasoning and lists the types of problems and datasets they have been used with.

Can Long-Context Language Models Subsume Retrieval, RAG, SQL, and More? [Link]

This paper explores the capabilities of long-context language models (LCLMs) in handling tasks traditionally dependent on external tools like retrieval systems, RAG (Retrieval-Augmented Generation), and SQL databases. It reveals that LCLMs, such as Gemini 1.5 Pro, GPT-4o, and Claude 3 Opus, can perform competitively with specialized models in tasks like retrieval and RAG. In particular, at the 128k token context length, LCLMs rival the performance of state-of-the-art retrieval systems and even surpass some multi-modal retrieval models. However, LCLMs struggle significantly with more complex tasks requiring multi-hop compositional reasoning, such as SQL-like tasks. The findings also highlight the importance of prompt design, as performance can vary greatly depending on the prompting strategies used.

Apple Intelligence Foundation Language Models - Apple [Link]

This report describes the architecture, the data used to train the model, the training process, how the models are optimized for inference, and the evaluation results, for the foundation language model developed to power Apple Intelligence features.

Notice that Apple includes the fundamentals of their RL methods, including a different type of soft margin loss for the reward model, regularizing binary preferences with absolute scores, their rejection sampling algorithm (iTeC) that is very similar to Meta’s approach, and their leave-one-out Mirror Descent RL algorithm, MDLOO.
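As a rough illustration of the "leave-one-out" part of MDLOO, the sketch below computes a generic leave-one-out advantage: for each of K responses sampled for the same prompt, the baseline is the mean reward of the other K-1 responses. This is the textbook estimator, not Apple’s exact algorithm (the Mirror Descent policy update is not shown), and the function name `leave_one_out_advantages` is hypothetical.

```python
def leave_one_out_advantages(rewards):
    """Generic leave-one-out advantage for K sampled responses.

    advantage_i = reward_i - mean(rewards of the other K-1 responses)
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("need at least two sampled responses")
    total = sum(rewards)
    # (total - r) is the sum of the other K-1 rewards
    return [r - (total - r) / (k - 1) for r in rewards]


print(leave_one_out_advantages([1.0, 0.0, 0.5]))
```

Because each response is compared only against its siblings, no separate value network is needed to form the baseline.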

Model Merging in LLMs, MLLMs, and Beyond: Methods, Theories, Applications and Opportunities [Link]

This survey provides an in-depth review of model merging techniques, an increasingly popular method in machine learning that doesn’t require raw training data or expensive computation.

Causal Agent based on Large Language Model [Link]

Causal Agent is an agent framework equipped with tools, memory, and reasoning modules to handle causal problems.

Interactive visualization tool designed for non-experts to learn about Transformers.

The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery - Sakana.AI [Link]

Transformers in music recommendation - Google Research [Link]

A Comprehensive Overview of Large Language Models [Link]

A Survey on Benchmarks of Multimodal Large Language Models [Link]

This paper provides an extensive review of 180 benchmarks used to evaluate Multimodal Large Language Models.

Qwen2-VL: To See the World More Clearly - QwenLM [Link]

The model comes in three sizes: 2B and 7B (open-sourced under Apache 2.0 license), and 72B (available via API).

Performance:

- Qwen2-VL demonstrates state-of-the-art performance on visual understanding benchmarks. The 72B model surpasses GPT-4o and Claude 3.5-Sonnet on most metrics.

- The 7B model achieves top performance in document understanding and multilingual text comprehension. Even the 2B model shows strong performance in video-related tasks and document understanding.

Key Capabilities:

- Processing videos over 20 minutes long

- Complex reasoning for device operation (e.g., mobile phones, robots)

- Multilingual text understanding in images (European languages, Japanese, Korean, Arabic, Vietnamese)

- Function calling for integration with external tools

- Document understanding and general scenario question-answering

- Integrates with Hugging Face Transformers and vLLM.

- Supports various tools for quantization, deployment, and fine-tuning, making it accessible for ML engineers and researchers to implement and customize.

Two key architectural innovations:

- Naive Dynamic Resolution support allows Qwen2-VL to handle arbitrary image resolutions by mapping them to a dynamic number of visual tokens. This ensures consistency between input and image information.

- Multimodal Rotary Position Embedding (M-RoPE) enables concurrent capture of 1D textual, 2D visual, and 3D video positional information.
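
The "dynamic number of visual tokens" idea in the first bullet can be sketched with a little arithmetic. The numbers below are assumptions recalled from the Qwen2-VL report (14x14 ViT patches, with adjacent 2x2 patches merged into one token), and snapping each side to the nearest multiple of 28 is a simplification; the function `visual_token_count` is hypothetical and ignores special tokens and any minimum or maximum pixel limits.

```python
def visual_token_count(height, width, patch=14, merge=2):
    """Estimate how many visual tokens an image of a given size produces.

    Assumes patch x patch ViT patches, then merge x merge neighbouring
    patches fused into one token, so one token covers a 28 x 28 pixel
    area with the default arguments.
    """
    unit = patch * merge
    # Snap each side to the nearest multiple of `unit`, at least one unit.
    h = max(unit, round(height / unit) * unit)
    w = max(unit, round(width / unit) * unit)
    return (h // unit) * (w // unit)


for size in [(224, 224), (448, 224), (1080, 1920)]:
    print(size, visual_token_count(*size))
```

The token count grows with the image area instead of being fixed, which is what lets larger or more detailed images keep more of their information.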

GitHub

Multimodal Report Generation Agent - Llamaindex [Link]

Building a RAG Pipeline over Legal Documents - Llamaindex [Link]

How to generate images with FLUX: the most photorealistic text-to-image model available

Simple Method: Use Replicate or FAL for inference via your browser.

HuggingFace Method

- Clone this Google Colab notebook.

- Change the Runtime to A100 GPU: FLUX.1 requires 32GB of GPU RAM to run, so ensure you select the A100 GPU runtime.

- Run the notebook.

- Change the prompt, currently set to: “A modern, minimalist house with large windows and a flat roof.”

GraphRAG Implementation with LlamaIndex [Link]

LlamaIndex releases notebook implementation of Microsoft’s GraphRAG.

Step-By-Step Tutorial: How to Fine-tune Llama 3 (8B) with Unsloth + Google Colab & deploy it to Ollama - reddit [Link]

Notebooks: Using Mistral Nemo with 60% less memory - Nvidia [Notebook1] [Notebook2]

Nvidia has released two free notebooks for Mistral NeMo 12B, enabling 2x faster finetuning with 60% less memory. Mistral’s latest free LLM is the largest multilingual open-source model that fits in a free Colab GPU.

Prompt Engineering Interactive Tutorial - Anthropics [Link]

News

Google has an illegal monopoly on search, judge rules. Here’s what’s next - CNN [Link]

Berkshire Hathaway sells off large share of Apple and increases cash holdings - The Guardian [Link]

Having accurate, reliable benchmarks for AI models matters, and not just for the bragging rights of the firms making them. Benchmarks “define and drive progress”, telling model-makers where they stand and incentivising them to improve, says Percy Liang of the Institute for Human-Centred Artificial Intelligence at Stanford University. Benchmarks chart the field’s overall progress and show how AI systems compare with humans at specific tasks. They can also help users decide which model to use for a particular job and identify promising new entrants in the space, says Clémentine Fourrier, a specialist in evaluating LLMs at Hugging Face, a startup that provides tools for AI developers.

― GPT, Claude, Llama? How to tell which AI model is best - The Economist [Link]

The current benchmark MMLU (massive multi-task language understanding) has several problems: 1) it is too easy for today’s models, leading to “saturation” (harder alternatives such as MMLU-Pro, GPQA, and MuSR have been developed in response); 2) models are trained on internet data, which also contains MMLU’s questions and answers, resulting in “contamination”; 3) some answers in MMLU are wrong, or a question has more than one correct answer; 4) small changes in the way questions are posed to models can significantly affect their scores.

There are some trustworthy automated testing systems other than the ChatBotArena leaderboard: HELM (holistic evaluation of language models), built by Dr Liang’s team at Stanford, and the EleutherAI Harness, used by Dr Fourrier’s team at Hugging Face for open-source models.

As models gain new skills, new benchmarks are being developed to assess them. For example, GAIA tests models on real-world problem solving, NoCha provides a novel challenge, etc. However, new benchmarks are expensive to develop because they require human experts to create a detailed set of questions and answers. Dr Liang is working on a project called AutoBencher, and Anthropic has started funding the creation of benchmarks with a focus on AI safety.

GPT-4o mini: advancing cost-efficient intelligence - OpenAI News [Link]

GPT-4o mini is small and intelligent. It’s probably distilled from a current or unreleased version of OpenAI’s models, similar to what Anthropic did with Claude Haiku and Google with Gemini Flash.

Made by Google 2024: Pixel 9, Gemini, a new foldable and other things to expect from the event - TechCrunch [Link]

MIT releases comprehensive database of AI risks - VentureBeat [Link]

Apple will let other digital wallets into Apple Pay, and even be the default - Ars Technica [Link]

Apple Aiming to Launch Tabletop Robotic Home Device as Soon as 2026 With Pricing Around $1,000 [Link]

Sakana AI’s ‘AI Scientist’ conducts research autonomously, challenging scientific norms - VentureBeat [Link]

Sakana AI unveils world’s first fully automatic ‘AI-Scientist’ generating complete research papers.

Scaling Laws, Economics and the AI “Game of Emperors.” - Gavin Baker [Link]