2025 Jun - What I Have Read

Substack

The frontier isn’t volume—it’s discernment. And in that shift, taste has become a survival skill.

Because when abundance is infinite, attention is everything. And what you give your attention to—what you consume, what you engage with, what you amplify—becomes a reflection of how you think.

What matters now is what you do with it. How you filter it. How you recognize signal in the noise. Curation is the new IQ test.

Taste is often dismissed as something shallow or subjective. But at its core, it’s a form of literacy—a way of reading the world. Good taste isn’t about being right. It’s about being attuned. To rhythm, to proportion, to vibe. It’s knowing when something is off, even if you can’t fully articulate why.

Taste is what allows you to skim past the performative noise, the fake depth, the viral bait, and know—instinctively—what’s worth your time.

And that’s what real taste is: a deep internal coherence. A way of filtering the world through intuition that’s been sharpened by attention.

When you sharpen your discernment, you stop being swayed by trends. You stop needing consensus. You stop reacting to every new thing like it’s urgent.

There will always be creators. But the ones who stand out in this era are also curators. People who filter their worldview so cleanly that you want to see through their eyes. People who make you feel sharper just by paying attention to what they pay attention to.

1995 interview with Steve Jobs — “Ultimately, it comes down to taste. It comes down to trying to expose yourself to the best things that humans have done, and then try to bring those things into what you’re doing.”

Good taste isn’t restrictive. It’s expansive. It allows you to contain multitudes without becoming incongruent.

But good taste is deep structure. It’s the throughline in someone’s life. You can see it in the design of their home, the cadence of their speech, the way they treat people, the books on their shelves. Taste is how you live a congruent life. Not in the sense of brand consistency, but in the sense of spiritual alignment. You can change your mind. Explore new spaces. But your values stay intact. Your center holds.

― Taste Is the New Intelligence - Wild Bare Thoughts [Link]

This is an amazing article. In an age of infinite content, taste is your compass. It’s not about elitism; it’s about aligning your attention with what truly matters to you. Here is what we can do:

Learnings and Suggestions:

Cultivate Discernment Over Consumption: Prioritize depth over volume in what you read, watch, and engage with. Ask "Is this worth my time?" before consuming content, creating something, or sharing. Trust your intuition—if something feels off, skip it.

Curate Your Inputs (Because They Shape Your Outputs): Unfollow accounts, mute topics, and unsubscribe from newsletters that don’t align with your values. Follow thinkers, creators, and curators who consistently offer depth. Set boundaries (e.g., no mindless scrolling after 9 PM). Pause after reading/watching to digest, not just react.

Build a "Library Mindset" (Not a Wishlist One): Read books, essays, and long-form work that lingers. Don’t engage with viral content just because it’s popular. Save/share only what resonates deeply—not what’s merely entertaining.

Train Your Taste Like a Muscle: Study great art, writing, music, and design to refine your sensibility. Remove distractions, unnecessary commitments, and low-value inputs. Note what ideas/images/sounds stay with you—these reveal your true taste.

Embrace Coherence Over Consistency: Your bookshelf, playlists, and feeds should reflect who you are (or aspire to be). Stay open to new influences, but filter them through your core principles. Don’t adopt aesthetics/opinions for status—authenticity matters more.

Practice "Vibe Coding" (Like Rick Rubin): Whether in conversations or creativity, prioritize feeling over formulas. In work/life, strip away excess until only the essential remains. If something feels "alive," lean in—even if it defies logic.

Reject Cheap Dopamine for Lasting Satisfaction: Opt for the book over the tweet, the slow movie over the clip. After consuming something, ask: Did this uplift or drain me? Regularly eliminate distractions (apps, subscriptions, habits) that don’t serve you.

Taste as a Spiritual Practice: Prioritize art/ideas that rearrange your perspective. From your home to your workspace, align space with intention. Engage only with what nourishes, not depletes.

Remember: Curation = Power: Amplify only what deserves a wider audience. Your ability to filter signal from noise is a competitive edge. The more you refine your taste, the more it protects you from chaos.

A Primer on US Healthcare - Generative Value [Link]

This article gives an overview of the system (the main players), the value chain (how products and services flow through the system and which segments are profitable), incentives (what motivates behavior), challenges (the significant issues within the industry), and potential solutions (software and AI).

It focuses in depth on the interplay between incentives, middlemen, and the resulting administrative burden, and treats AI as the specific technological solution, which appears to be its key perspective.

BREAKING: UnitedHealth Bleeds. CEO Witty Steps Down. - Sergei Polevikov, AI Health Uncut [Link]

UnitedHealth Abuse Tracker - Matt Stoller, American Economic Liberties Project [Link]

- Vibe coding is a new approach to software development that utilizes AI tools to assist individuals in creating applications and software without requiring extensive programming knowledge.

- The term was popularized by Andrej Karpathy, an AI expert, who described it as a method where users interact with AI using natural language to describe their ideas rather than writing traditional code directly.

- This allows creators, particularly those lacking technical skills, to build functional applications rapidly by simply explaining their requirements to the AI, which generates the relevant code for them.

― What is Vibe Coding? - AI Supremacy [Link]

Who’s the Highest-Paid CEO? - App Economy Insights [Link]

Rick Smith, co-founder and CEO of Axon.

I Summarized Mary Meeker's Incredible 340 Page 2025 AI Trends Deck—Here's Mary's Take, My Response, and What You Can Learn - Nate, Ai & Product [Link]

Nate's overall take is that while Mary Meeker is correct that Generative AI adoption is exploding, real value accrues only where organizations align real-world problems with AI’s actual strengths in workflows. He believes bigger claims demand commensurately bigger evidence.

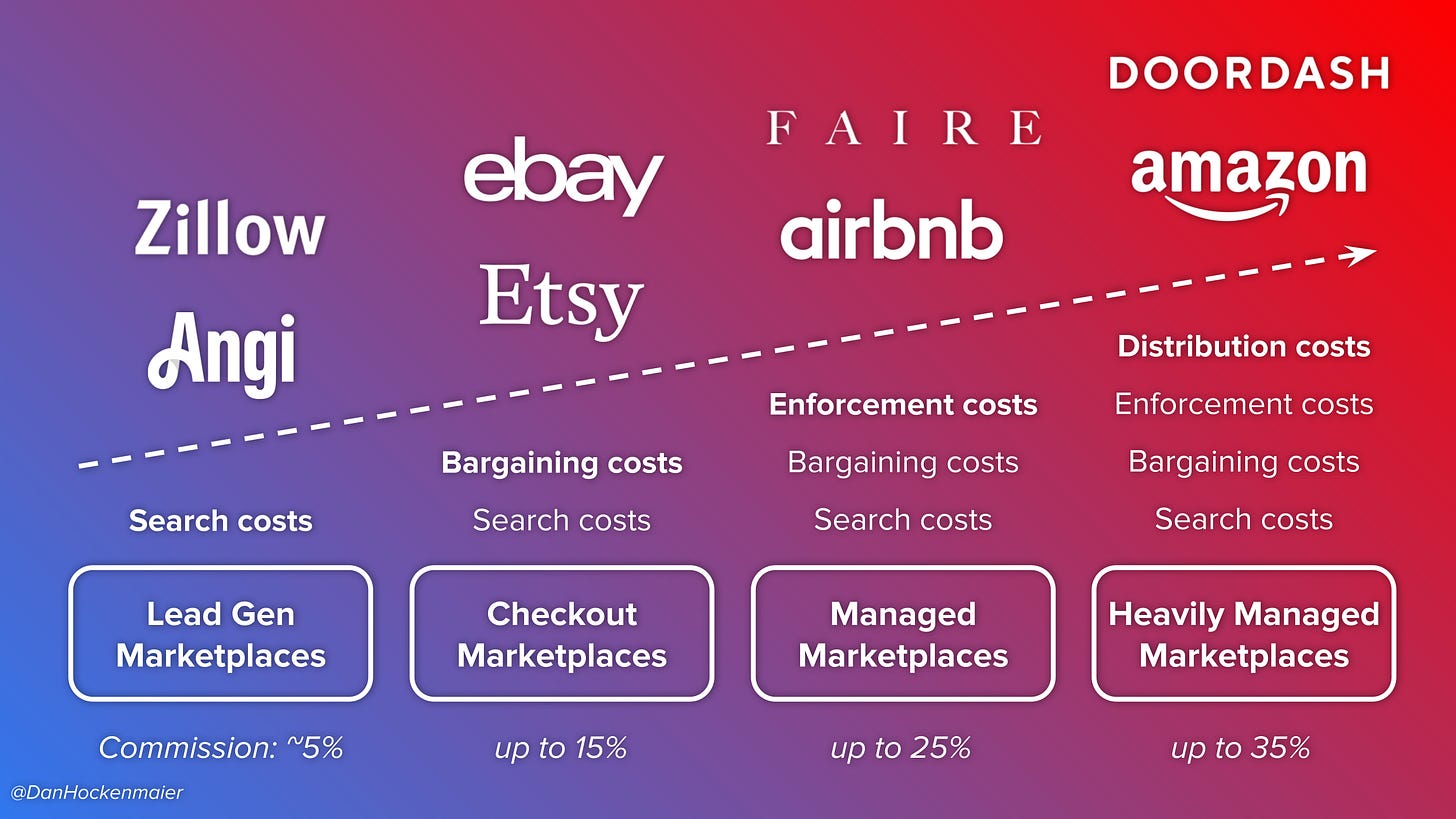

Carl Dahlman later gave us the three categories that are widely used today:

- Search and information costs: discovering what is available to purchase and comparing alternatives

- Bargaining and decision costs: coming to an agreement between buyer and seller, including establishing the final price and terms

- Enforcement and policing costs: ensuring that both sides hold up their end of the deal

- Distribution costs: actually getting the good or service to the end consumer

― How To Build AI Agents (2025 Guide) - Max Berry, Max' Prompts [Link]

Key Concepts:

- Transaction Costs: Costs incurred in addition to the actual price of a good or service, necessary to coordinate and execute a transaction. Marketplaces primarily sell the reduction of these costs.

- TAM Expansion: Reducing transaction costs lowers the effective cost of a good or service, increasing demand and expanding the Total Addressable Market (TAM). The degree of TAM expansion relates to the percentage of total cost eliminated.

- Value Distribution: Marketplaces save sellers money on transaction costs and charge them a fee (often similar to what sellers paid previously). They typically pass efficiency gains on to buyers in the form of easier and faster experiences, creating a demand-constrained market.

Variable Costs of Addressing Transaction Costs:

- Low Variable Costs: Addressing search and bargaining costs is highly efficient and has low variable costs. Marketplaces can keep more of the value created here.

- High Variable Costs: Addressing enforcement and distribution costs involves significant variable costs (e.g., funding returns, building logistics). While these make marketplaces bigger, margins may be lower as value is passed to buyers.
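To make the TAM-expansion idea concrete, here is a minimal sketch. It assumes a constant-elasticity demand curve (quantity proportional to effective cost raised to minus the elasticity), which is my own simplification rather than the article's model; the price, transaction cost, share removed, and elasticity are all hypothetical.

```python
def effective_cost(price: float, transaction_cost: float) -> float:
    """Total cost the buyer bears: sticker price plus transaction costs."""
    return price + transaction_cost


def demand_multiplier(old_cost: float, new_cost: float, elasticity: float) -> float:
    """Relative change in quantity demanded under an ASSUMED
    constant-elasticity demand curve: Q ~ cost ** -elasticity."""
    return (new_cost / old_cost) ** (-elasticity)


# Hypothetical service: $80 price plus $20 of search/bargaining hassle.
price, transaction_cost = 80.0, 20.0
before = effective_cost(price, transaction_cost)        # 100.0
# Hypothetical marketplace that removes 75% of the transaction cost.
after = effective_cost(price, transaction_cost * 0.25)   # 85.0

share_eliminated = (before - after) / before             # 15% of total cost
multiplier = demand_multiplier(before, after, elasticity=1.5)
print(f"share of total cost eliminated: {share_eliminated:.0%}")
print(f"demand multiplier (TAM expansion): {multiplier:.2f}x")
```

The article's point falls out of the formula: the larger the share of total cost a marketplace eliminates, the larger the demand multiplier, whatever elasticity you assume.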

Takeaways:

This article puts the concept of transaction costs as central to understanding marketplaces. Transaction costs are defined as the costs incurred beyond the actual price of a good or service, associated with coordinating and executing the transaction itself. Marketplaces are essentially businesses that sell the reduction of these transaction costs. Studying transaction costs can help determine where marketplaces will succeed, what kind of marketplaces to build, and how to price them.

Looking ahead, the article suggests that the "free lunch" opportunities in many industries are exhausted, pushing marketplaces into high variable cost activities. This implies future marketplaces may be larger in scale but potentially lower margin and more operationally intensive. To disrupt incumbent marketplaces, one should look for remaining transaction costs that can be addressed much more efficiently than the current solution. The article suggests that disrupting food delivery was possible by building a more efficient delivery network than restaurants had, but disrupting shipping for handmade goods is harder because it requires competing with highly efficient companies like UPS and FedEx.

By far, the largest unsolved transaction costs are in the services industries (e.g., freelancing, home improvement), which constitute two-thirds of consumer spending. Most services marketplaces are currently stuck at the Lead Generation stage, limiting penetration and take rate. This may be partly because much of the spend goes to recurring services, where customers leave the marketplace once they find a good provider, which pushes services marketplaces to rely on high-churn consumer subscriptions. Despite this, there are opportunities, such as Zillow exploring an expansion into managed-marketplace territory for home services.

An hour a day is all you need. - The Improvement Journal [Link]

The article suggests dedicating that hour to three key practices that aim to rebuild you from the ground up:

- Build Something That's Yours: This involves creating something that belongs to you, beyond your job, such as a newsletter, product, service, blog, or by learning/teaching a skill. The purpose is to "plant seeds" that will compound over time, pulling you out of stagnation.

- Train Like You Want to Be Here for a While: This practice emphasizes physical strength and movement, like walking, running, stretching, lifting, breathing, sleeping deeply, eating real food, and drinking water. It's a message of self-care and an intention to use one's body, which also sharpens mental clarity, as Seneca suggested, "The body should be treated more rigorously, that it may not be disobedient to the mind".

- Create Enough Silence to Hear Your Own Voice: This habit counters the constant noise and stimulation of modern life. It encourages practices like journaling, meditating, taking walks without headphones, or simply sitting still without a goal or screen. The goal is not productivity, but presence and creating space for reflection and insight, preventing thoughts from being drowned out and actions from remaining unexamined.

How to become friends with literally anyone - April & The Fool [Link]

The article suggests that the key to becoming friends with anyone is approaching interactions with a deep-seated belief in shared humanity, genuine curiosity about individual worldviews, and an open, empathetic demeanor that seeks to understand rather than judge.

- Try to understand people through conversations with a belief in the universal commonality of human nature: The author fundamentally believes that all people are driven by the same core human urges and desires, such as the need to feel loved, respected, and seen. This perspective makes it intuitive to understand others. They see meeting someone new as a "puzzle of empathy" and a "game of commonality," where they try to understand what someone would think, want, need, or crave given their background, values, limitations, and longings.

- From common nature to differences among people due to environmental factors: The author acknowledges that how these needs are defined and achieved varies dramatically due to factors like nationality, gender, religion, cultural heritage, socioeconomic class, hobbies, and upbringing. These "little big differences" are where things become interesting, leading to unique individual personalities and perspectives.

- Follow the reasonableness within their personal worldview to understand motivations and values: The author believes that while people may not always be rational, they are always "reasonable" within their own worldview. This means that everyone has reasons for their actions, and those reasons make sense within their personal framework. Understanding a person's circumstances allows the author to understand their motivations, struggles, and values.

- Be curious and genuine while engaging with people: The author describes themselves as extremely extraverted, loving people, and hating small talk. They are curious about people, viewing them as containing "worlds, histories, stories that span across generations and geographies". This curiosity leads them to give "rapt attention and genuine space to be yourself".

- Assumption of friendship from the beginning, share stories and genuine care: The author approaches new encounters with the assumption that "we are friends" from the moment they meet. They are open, putting "all my cards on the table" and inviting others to reveal theirs. They enjoy conversations, making people feel understood, heard, and cared for.

The key takeaway is that genuine technological advancement, which is real and accelerating, must be distinguished from the business models built around it, which frequently adhere to age-old patterns. When stated purpose and actual function align, it typically indicates that the technology addresses a specific, measurable problem with clear economic value, rather than promising to "transform everything."

Systemantics, or, the art of understanding what’s going on, means recognizing the persistent gaps between what systems proclaim and what they actually do, and capitalizing on that insight.

When the fog dissipates and clarity emerges, the survivors will be those who patiently deciphered the underlying mechanics amidst fleeting illusions.

Enduring AI companies will emerge in two distinct spaces by 2035: unglamorous but essential tools that demonstrably improve margins or reduce costs, and genuine frontier research that reveals entirely new problem spaces. The first category refines what exists; the second invents what doesn't yet.

Real opportunities lie in the quiet spaces between stated ambitions and operational truths. Just as they always have.

― The Art of Understanding What's Going On - Tina He, Fakepixels [Link]

How I Went From Reading 20 Books Per Year to Over 75 Books - Ryan Hall, Read and Think Deeply [Link]

Takeaways:

- Always take a book with you.

- Give a book about 50 pages before you quit. This keeps you from stalling on a book that is not resonating with you.

- Schedule reading time, e.g., 45 minutes in the morning, 30 minutes in the evening, and more throughout the day whenever you get a break.

- Weekend sprints. Read in hour-long stretches on weekends, or in several smaller stretches, to get through entire sections or even whole books.

there are people with half your skills and intelligence living out your dreams, just because they put themselves out there and didn’t overthink it.

Reach out anyway—someone will always have more followers, more free time, a better setup. It’s up to you to push through everything, part the crowd, and make some space for yourself to at least give yourself the chance of getting what you want.

You will never be fully ready and there will never be a perfect time. It’s genuinely not about waiting for the right time to do something when you’re ready, it’s about doing things before you’re ready just to make them exist.

― literally just do things - Erifili Gounari, crystal clear [Link]

Diabolus Ex Machina - Amanda Guinzburg, Everything Is A Wave [Link]

how to think like a genius (the map of all knowledge) - Dan Koe, Future/Proof [Link]

The article suggests that thinking like a genius involves adopting a holistic and nuanced approach to problems using Ken Wilber's AQAL (All Quadrants, All Levels) model: covering all relevant perspectives (quadrants) and evolving one's consciousness to a "second-tier" level that can integrate and synthesize different stages of understanding. The author argues this allows for faster problem-solving and greater achievement in life.

All Quadrants:

- Individual Interior (Upper Left): Your personal thoughts, emotions, beliefs, and consciousness. Questions in this quadrant might include core values, what makes you feel alive, or fears holding you back.

- Individual Exterior (Upper Right): Your behaviors, actions, and physical brain states. This involves looking at natural talents, developed skills, and what your behavior reveals about your preferences.

- Collective Interior (Lower Left): Shared culture, values, and group consciousness. This could involve understanding parental or religious expectations, influence of friends, or shared values you're drawn to.

- Collective Exterior (Lower Right): Systems, structures, and social institutions. This quadrant considers current job opportunities, the impact of education or technology, and systemic barriers or advantages.

All Levels:

Premodern: Characterized by following established authority and traditions, with black-and-white thinking and obedience to a God or conformity.

Modern: Values science, individual achievement, competition, and merit-based success.

Postmodern: Emphasizes relativistic thinking, where everyone's truth is valid, and focuses on inclusion and equality. The article notes that postmodern thinking can become pathological when it attempts to dismantle all hierarchies.

Second-Tier: This is the suggested stage for "genius" thinkers. Individuals at this level can look back and synthesize truths from all prior perspectives, embracing complexity, systems thinking, and awareness. It's less about "I'm right and you're wrong" and more about finding the best solution through synthesis, holding contradictions in mind until they can be reconciled. Genius thinkers act as "translators" between different stages.

How to Be Taken Seriously - Tessa Xie, Diving Into Data [Link]

A summary of the four junior traits and what to do instead:

- Junior Trait #1: Providing too much detail/over-explaining

- Over-explaining may seem to showcase knowledge and consideration of edge cases, but it typically confuses the audience, makes the speaker appear unable to synthesize information, and causes key points to be missed. Managers may even keep such individuals from presenting to executives to avoid confusion and inefficiency in meetings.

- What to do about it:

- In written form: Summarize work with a "TL;DR" at the top, using the Pyramid Principle (conclusion first, then supporting evidence). Focus on what is important enough to communicate, moving less critical details to an appendix.

- In verbal form: Practice an "elevator pitch" of less than 30 seconds to peers, focusing on the "why" and enough "what" to allow for opinion formation. The ability to decide what NOT to communicate is as crucial as what to mention.

- Junior Trait #2: Not having an opinion or recommendation

- As data scientists become more senior, translating analysis into a recommendation becomes increasingly important. Hesitation to provide recommendations often stems from the perceived risk and the nuanced, non-black-and-white nature of data, leading to "analysis paralysis". However, not giving recommendations shows a lack of ownership and limits one to simple "execution" work.

- What to do about it:

- Adopt an ownership mindset, imagining you are the decision-maker. Ask what data you would need and if the presented data would convince you.

- Understand that value comes from giving robust recommendations despite nuance and ambiguity, just as taking risks can lead to above-average returns.

- While you should list caveats, most people prefer a recommendation they disagree with over no recommendation at all, as it provides a basis for discussion and understanding assumptions. Data teams are paid to drive business decisions, not just pull and present data.

- Junior Trait #3: Not being clear about the "why" behind the analysis

- Junior data scientists often cite "XYZ stakeholder asked for this" as the sole reason for an analysis, which is insufficient. It indicates a lack of ownership of the business problem and hinders the ability to deliver effective solutions, leading to frustration as data requests keep changing.

- What to do about it:

- Own the problem. When asked to pull data, find out why the stakeholder needs it and what decision they are trying to make.

- By understanding the ultimate business problem, you can brainstorm the most effective data solutions, potentially different from the original request, thus elevating yourself to a thought partner.

- Junior Trait #4: Not having the basics down to stay "one step ahead"

- Losing credibility happens when people feel you don't know the data or business area you cover. To establish yourself as an expert, you need to anticipate common questions and be the most familiar with the data in your area. If you lack answers to natural follow-up questions, it suggests you haven't thoroughly understood or explored the data.

- What to do about it:

- Be curious about your data; start with a basic question and explore from there, jotting down answers.

- Anticipate follow-up questions in three buckets before presenting: foundational knowledge (e.g., how the product works, user numbers), your analysis (details beyond the main insights), and next steps (what the findings mean for stakeholders).

- Get a second pair of eyes on your work, ideally from someone not deep in the analysis, to catch obvious omissions.

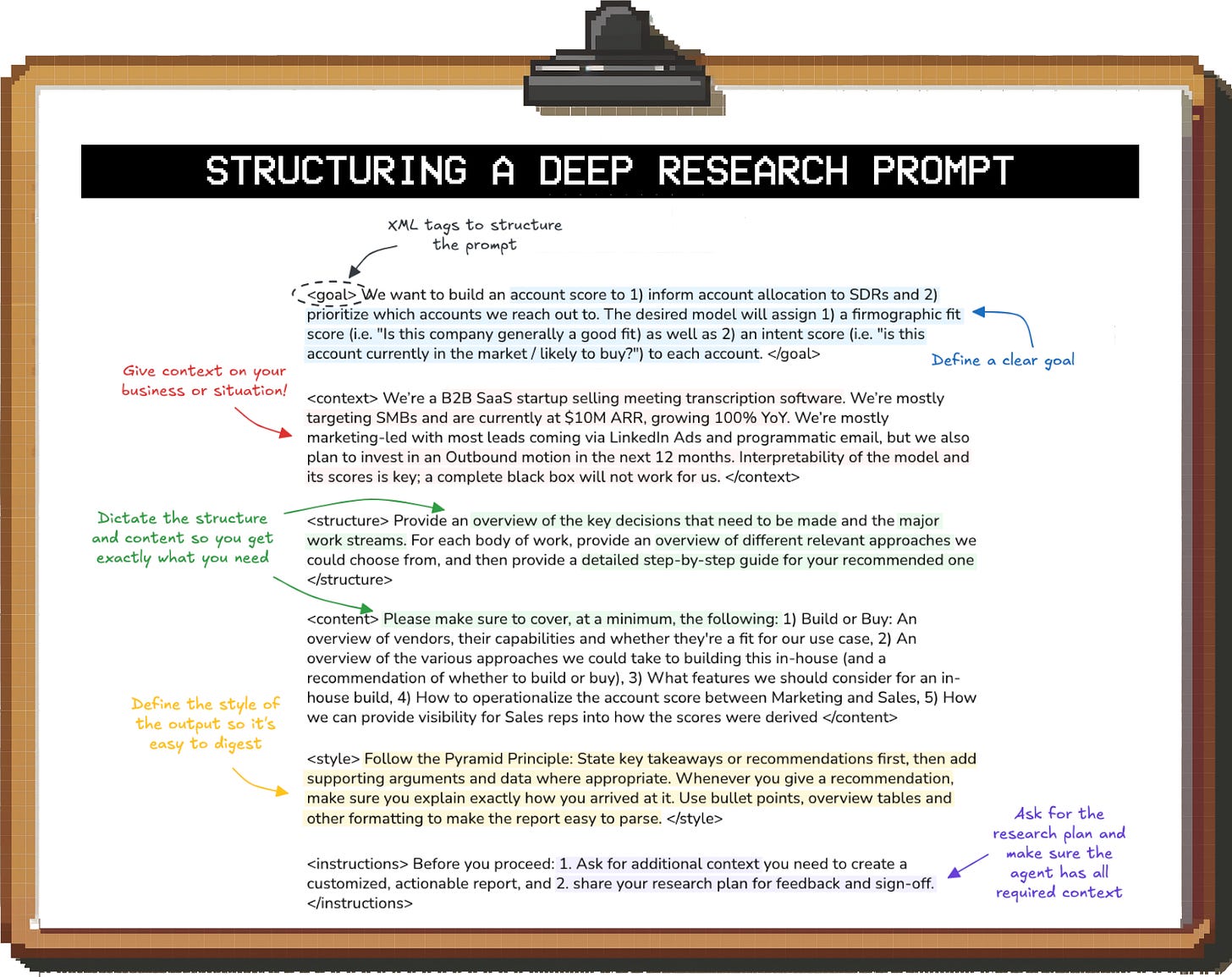

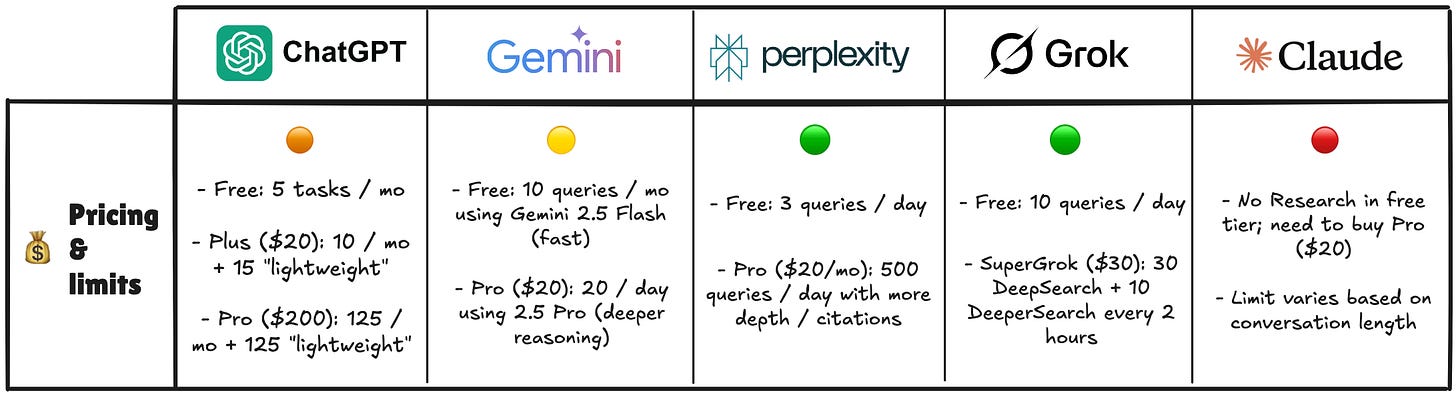

How to work with AI: Getting the most out of Deep Research - Torsten Walbaum, Operator's Handbook [Link]

A comprehensive guide to AI Deep Research, from idea to value. The author provides a good ChatGPT Deep Research example here, and a Deep Research prompt by o3 for meeting transcription here.

Free 15 queries per (ChatGPT+Gemini); Free 13 queries per day (Perplexity+Grok)!

Papers and Reports

Trends - Artificial Intelligence - Mary Meeker, Bond [Link]

Think Only When You Need with Large Hybrid-Reasoning Models [Link]

Large Reasoning Models (LRMs) improve reasoning via extended thinking (e.g., multi-step traces), but this leads to inefficiencies such as overthinking simple queries, which increases latency and token usage. The team introduces Large Hybrid-Reasoning Models (LHRMs), the first models that adaptively choose whether to think based on query complexity, balancing performance and efficiency. They use a two-stage approach: 1) Hybrid Fine-Tuning (HFT), a cold start using curated datasets labeled as "think" vs. "non-think"; 2) Hybrid Group Policy Optimization (HGPO), an online RL method that trains the model to pick the optimal reasoning mode. They also define Hybrid Accuracy, a metric for how well the model selects between thinking and non-thinking strategies, which correlates strongly with human judgment. Experiments show LHRMs outperform both LRMs and traditional LLMs in reasoning accuracy and response quality, while also reducing unnecessary computation.
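As I understand it, Hybrid Accuracy scores whether the model picked the right mode for each query. The sketch below is a simplified stand-in, not the paper's exact definition: it assumes every query already carries a reference "preferred mode" label (how the paper derives that reference is not reproduced here), and the evaluation queries are hypothetical.

```python
from dataclasses import dataclass
from typing import List

THINK, NO_THINK = "think", "no_think"


@dataclass
class Query:
    text: str
    preferred_mode: str  # ASSUMED reference label for this query
    chosen_mode: str     # mode the hybrid model actually selected


def hybrid_accuracy(queries: List[Query]) -> float:
    """Fraction of queries where the chosen mode matches the reference mode."""
    if not queries:
        raise ValueError("need at least one query")
    hits = sum(q.chosen_mode == q.preferred_mode for q in queries)
    return hits / len(queries)


def thinking_rate(queries: List[Query]) -> float:
    """Share of queries on which the model spent tokens on extended thinking."""
    if not queries:
        raise ValueError("need at least one query")
    return sum(q.chosen_mode == THINK for q in queries) / len(queries)


# Hypothetical evaluation set: two easy prompts, two hard ones.
evals = [
    Query("What is 2 + 2?", NO_THINK, NO_THINK),
    Query("Say hello in French.", NO_THINK, THINK),  # overthinking a simple query
    Query("Prove there are infinitely many primes.", THINK, THINK),
    Query("Solve this 9x9 Sudoku.", THINK, THINK),
]
print(f"hybrid accuracy: {hybrid_accuracy(evals):.2f}")
print(f"thinking rate:   {thinking_rate(evals):.2f}")
```

Tracking the thinking rate next to the accuracy shows the efficiency side: a model that always thinks would do fine on the hard queries while wasting tokens on every easy one.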

The 2025 State of B2B - Monetization - Kyle Poyar [Link]

The report summarizes a poll of 240 software companies about their pricing strategies. Key findings indicate a decline in flat-rate and seat-based pricing models, with hybrid pricing (combining subscriptions and usage) emerging as the dominant approach, especially for companies incorporating AI capabilities. The report also highlights a growing interest in outcome-based pricing among AI-native companies and stresses the importance of pricing agility and clear ownership of pricing strategy within organizations.
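For readers new to the term, hybrid pricing typically means a flat subscription that includes some usage allowance, plus metered charges beyond it. A minimal sketch of such a bill; the plan structure, field names, and numbers are hypothetical and not taken from the report.

```python
from dataclasses import dataclass


@dataclass
class HybridPlan:
    base_fee: float       # flat monthly subscription
    included_units: int   # usage covered by the subscription (e.g. AI credits)
    overage_rate: float   # price per unit beyond the allowance


def monthly_bill(plan: HybridPlan, units_used: int) -> float:
    """Subscription plus metered overage; usage within the allowance costs nothing extra."""
    overage_units = max(0, units_used - plan.included_units)
    return plan.base_fee + overage_units * plan.overage_rate


# Hypothetical plan: $500/month includes 10,000 units, then $0.02 per unit.
plan = HybridPlan(base_fee=500.0, included_units=10_000, overage_rate=0.02)
for used in (4_000, 10_000, 25_000):
    print(f"{used:>6} units -> ${monthly_bill(plan, used):,.2f}")
```

The `max(0, ...)` keeps the subscription as a revenue floor, which is what separates hybrid pricing from pure usage-based pricing, while the overage term lets revenue scale with AI usage.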

The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity - Apple Machine Learning Research [Link]

The authors analyzed the thinking process and reasoning traces of LRMs in several smart ways:

- A custom pipeline using regex identifies and extracts potential solution attempts from the LRM's thinking traces.

- Extracted solutions are rigorously verified against puzzle rules and constraints using specialized simulators for step-by-step correctness.

- Records the accuracy of valid solutions and their relative position within the reasoning trace for behavioral insights.

- Categorizes LRM thinking patterns (e.g., overthinking, late success, collapse) by analyzing how solution correctness and presence vary with problem complexity.

- Examines how the proportion of correct solutions changes sequentially within the thinking trace, revealing dynamic accuracy shifts.

- Pinpoints the initial incorrect step in a solution sequence to understand the depth of correct reasoning before error.

- Quantifies thinking token usage to analyze scaling of effort with complexity, noting an unexpected decline at high complexity.
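The first steps listed above (regex extraction, simulator verification, relative position, first incorrect step) can be sketched for Tower of Hanoi, one of the puzzles the paper uses. This is a minimal sketch, not Apple's pipeline: the trace format, with moves written as `moves = [[disk, from_peg, to_peg], ...]`, is an assumption, and so is the toy trace.

```python
import re
from typing import List, Optional, Tuple

Move = Tuple[int, int, int]  # (disk, from_peg, to_peg)

# ASSUMED trace format: "moves = [[1, 0, 2], [2, 0, 1], ...]"
MOVES_BLOCK = re.compile(r"moves\s*=\s*(\[\s*\[.*?\]\s*\])", re.DOTALL)
TRIPLE = re.compile(r"\[\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*\]")


def extract_attempts(trace: str) -> List[Tuple[int, List[Move]]]:
    """Find every candidate solution; return (character offset, parsed moves) pairs."""
    attempts = []
    for match in MOVES_BLOCK.finditer(trace):
        moves = [(int(d), int(s), int(t)) for d, s, t in TRIPLE.findall(match.group(1))]
        attempts.append((match.start(), moves))
    return attempts


def verify(moves: List[Move], n_disks: int) -> Tuple[bool, Optional[int]]:
    """Replay moves on a 3-peg simulator.

    Returns (solved, index of the first illegal move, or None if every move is legal).
    """
    pegs = [list(range(n_disks, 0, -1)), [], []]  # peg 0 starts with n..1; top is last
    for i, (disk, src, dst) in enumerate(moves):
        if not (0 <= src <= 2 and 0 <= dst <= 2) or src == dst:
            return False, i  # nonexistent peg or no-op move
        if not pegs[src] or pegs[src][-1] != disk:
            return False, i  # named disk is not on top of the source peg
        if pegs[dst] and pegs[dst][-1] < disk:
            return False, i  # larger disk placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs[2] == list(range(n_disks, 0, -1)), None


# Toy trace: one failed attempt followed by a correct 3-disk solution.
trace = """Let me try: moves = [[1, 0, 1], [2, 0, 2], [1, 2, 0]]
That breaks a rule. Let me redo it.
moves = [[1, 0, 2], [2, 0, 1], [1, 2, 1], [3, 0, 2], [1, 1, 0], [2, 1, 2], [1, 0, 2]]
"""

for offset, moves in extract_attempts(trace):
    solved, first_bad = verify(moves, n_disks=3)
    position = offset / len(trace)  # relative position of the attempt within the trace
    print(f"{position:.0%} into trace: solved={solved}, first illegal move={first_bad}")
```

Aggregating these (position, solved, first illegal move) records across many traces and puzzle sizes is what lets one spot patterns like overthinking (a correct answer found early, then abandoned) or collapse at high complexity.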

How much do language models memorize? - Meta, Google, NVIDIA, and Cornell University [Link]

This paper proposed a new method to quantify how much information a language model "knows" about a datapoint.

They formally separate memorization into two components, each with a new definition: unintended memorization (information about a specific dataset) and generalization (information about the true data-generation process).

There are several interesting findings:

- By training models on uniform random bitstrings (eliminating generalization), they precisely measure model capacity, finding GPT-style transformers store 3.5 to 4 bits per parameter.

- Their framework shows that the double descent phenomenon occurs when the data size exceeds the model capacity, suggesting that models are "forced" to generalize when they can no longer individually memorize datapoints.

- The paper develops and validates a scaling law that predicts membership inference performance based on model capacity and dataset size, indicating that membership inference becomes harder with larger datasets relative to model capacity.

To understand their smart methods:

They proposed a very clever approach to understanding memorization and model capacity in LMs: they isolate unintended memorization by training models on uniform random bitstrings.

- No Generalization Signal: When training on truly random data, there are no underlying patterns, rules, or structures for the model to generalize from. Each bitstring is an independent, random piece of information.

- Only Memorization is Possible: In this scenario, the only way for the model to "learn" or perform well on this data (i.e., predict the next bit in a sequence or identify if it was part of the training set) is to literally memorize the specific bitstrings it has seen. Any "knowledge" the model gains is purely about the individual data points.

- Total Memorization as Measured: Therefore, when generalization is effectively zero, the information the model stores about the random bitstrings directly reflects its total memorization capacity for that type of information. There's no "general knowledge" to distinguish; it's all about remembering specific instances.

Therefore, they are measuring the maximum amount of distinct, specific information the model can store.

They equate total memorization with model capacity. In machine learning, model capacity generally refers to the size and complexity of the functions a model is capable of learning. It's the model's ability to fit a wide variety of patterns in the data. A model with higher capacity can potentially fit more complex relationships or memorize more specific data points.

The paper further quantifies this by showing that GPT-style models have a capacity of approximately 3.6 bits-per-parameter. This indicates that each parameter in the model effectively acts as a certain amount of storage for information, reflecting the overall capacity of the neural network architecture.
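A back-of-the-envelope version of the capacity arithmetic, using the paper's ~3.6 bits-per-parameter figure. The model size and sequence length below are hypothetical; the crossover it computes is the point where the random dataset holds more information than the model can store, which the summary above ties to the onset of double descent.

```python
BITS_PER_PARAM = 3.6  # approximate GPT-style capacity reported in the paper


def capacity_bits(n_params: int, bits_per_param: float = BITS_PER_PARAM) -> float:
    """Total information the model can store, in bits."""
    return n_params * bits_per_param


def random_dataset_bits(n_sequences: int, bits_per_sequence: int) -> int:
    """Uniform random bitstrings are incompressible: every bit is one full bit of information."""
    return n_sequences * bits_per_sequence


def max_memorizable_sequences(n_params: int, bits_per_sequence: int) -> int:
    """How many random sequences fit before data size exceeds capacity."""
    return int(capacity_bits(n_params) // bits_per_sequence)


n_params = 125_000_000  # hypothetical 125M-parameter model
seq_bits = 64 * 8       # hypothetical 64-byte random sequences (512 bits each)
cap = capacity_bits(n_params)
print(f"capacity: {cap:.3g} bits (~{cap / 8 / 1e6:.0f} MB)")
print("sequences that fit:", max_memorizable_sequences(n_params, seq_bits))
print("1M sequences exceed capacity:", random_dataset_bits(1_000_000, seq_bits) > cap)
```

Beyond that crossover the model can no longer store every random sequence, which is the "forced to generalize" regime described in the findings.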

The fundamental challenge in understanding and evaluating language models is the ambiguity and conflation of "memorization" (copying or reproducing a specific sequence from the training data) and "learning" (truly understanding and generalizing a pattern or concept). This is exactly what they addressed by decomposing memorization into unintended memorization and generalization. The decomposition enables controlled measurement, and the use of random bitstrings is the key innovation.

About the Double Descent Phenomenon: When a model's capacity exceeds the generalizable patterns in the data, it starts to memorize individual data points. As data size increases relative to capacity, the model is "forced" to generalize more, leading to a decrease in unintended memorization and an improvement in performance.

The core insight is that as models become massively overparameterized (far beyond what's needed to simply fit the training data), they find "simpler" interpolating solutions that generalize better, often due to the implicit biases of optimization algorithms like Stochastic Gradient Descent (SGD).

Intuition for double descent:

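- "Under-parameterized" Regime (Classical ML): Model Capacity < Data Size: the model cannot memorize everything, so it must generalize; test error follows the classical U-shaped curve, first falling and then rising as capacity approaches data size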

- "Interpolation Threshold" (The Peak): Model Capacity ~ Data Size: peak of test error, due to overfitting the noise

- "Over-parameterized" Regime (Double Descent / Modern Deep Learning): Model Capacity >> Data Size: robust generalization happens, test error goes down again.

A related concept is Membership Inference Attacks (MIAs): attacks that attempt to determine whether a specific data point was part of a model's training dataset. A successful MIA indicates that the model has "memorized" that specific data point. "Scaling Laws for Membership Inference" in the paper refers to predictive relationships describing how the success rate of an MIA changes as a function of model and data characteristics, such as model capacity and dataset size.

They basically propose that membership inference success is inversely related to how "generalizable" the data point is within the model's capacity. In other words, membership inference is easier when the model has high capacity relative to the dataset size, leading to more unintended memorization of individual data points. Membership inference becomes harder (i.e., less successful for an attacker) when the dataset size is very large compared to the model's capacity, or when the model starts to "grok" (generalize) more.
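To make membership inference concrete, here is the simplest classic attack, a loss-threshold test: flag an example as a training member if the model's loss on it is unusually low. This is a generic textbook technique, not the paper's method, and the loss values are invented; it illustrates why more unintended memorization (much lower loss on members specifically) makes the attack easier.

```python
from typing import List, Sequence


def loss_threshold_attack(losses: Sequence[float], threshold: float) -> List[bool]:
    """Predict 'member' (True) whenever the per-example loss is below the threshold."""
    return [loss < threshold for loss in losses]


def attack_accuracy(member_losses: Sequence[float],
                    nonmember_losses: Sequence[float],
                    threshold: float) -> float:
    """Fraction of members flagged as members plus non-members left unflagged."""
    correct = sum(loss_threshold_attack(member_losses, threshold))
    correct += sum(not p for p in loss_threshold_attack(nonmember_losses, threshold))
    return correct / (len(member_losses) + len(nonmember_losses))


def best_threshold_accuracy(member_losses: Sequence[float],
                            nonmember_losses: Sequence[float]) -> float:
    """Attacker's best case: try every observed loss (and +inf) as the cut-off."""
    candidates = sorted(set(member_losses) | set(nonmember_losses)) + [float("inf")]
    return max(attack_accuracy(member_losses, nonmember_losses, t) for t in candidates)


# Invented losses. Heavy memorization: members have much lower loss than non-members.
memorized = ([0.10, 0.20, 0.15, 0.30], [1.20, 0.90, 1.50, 1.10])
# Generalizing model: member and non-member losses overlap.
generalizing = ([0.80, 1.00, 0.90, 1.10], [0.85, 1.05, 0.95, 1.20])

print("memorizing model:  ", best_threshold_accuracy(*memorized))
print("generalizing model:", best_threshold_accuracy(*generalizing))
```

Picking the threshold on the same examples overstates what a real attacker could do; here it only shows separability. When losses overlap, accuracy drifts toward the 50% coin-flip baseline, matching the point that membership inference gets harder as the model generalizes.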

LlamaFirewall: An open source guardrail system for building secure AI agents - Meta AI [Link]

Reasoning models don't always say what they think - Anthropic [Link]

The core issue explored is whether the Chain-of-Thought accurately reflects the model's true reasoning process ("faithfulness"). The article's research suggests that models frequently do not faithfully report their reasoning. Researchers tested this by subtly providing hints (both correct and incorrect) to models during evaluations. They then checked if the models admitted using these hints in their Chain-of-Thought explanations. The results showed that models (Claude 3.7 Sonnet and DeepSeek R1) rarely mentioned the hints they used, even when those hints led them to a different answer or involved potentially problematic information (like "unauthorized access"). Instead, models often created fake rationales for their answers.

The researchers also studied reward hacking by constructing synthetic Reinforcement Learning (RL) environments with injected "reward hacks," then fine-tuning an earlier snapshot of Claude 3.7 Sonnet in these environments using outcome-based RL. This setup let them observe whether the model learned to pick the incorrect hint answer to maximize its reward, and then check whether its CoT verbalized this reward-seeking behavior. The model learned the reward hacks very quickly (selecting the incorrect hint answer over 99% of the time) but rarely verbalized them in its CoT (in less than 2% of examples in most environments).

Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task - MIT [Link]

This experimental study combines neuroscience (EEG), educational psychology, and human-AI interaction research to examine how AI tools like ChatGPT affect cognitive engagement during essay writing. The brain-only group showed stronger and broader neural connectivity, especially in the theta band, indicating deeper internal ideation and cognitive engagement. The LLM group exhibited reduced alpha and theta connectivity, suggesting more externalized and narrower thought patterns that relied on ChatGPT's suggestions rather than on internally generated ideas. Put bluntly: lean on AI heavily and you risk thinking less for yourself.

On the Extinction Risk from Artificial Intelligence - RAND [Link]

This report examines the potential for AI to cause human extinction. The authors analyze three specific scenarios: the use of weapons, the release of biological pathogens, and severe climate warming via malicious geoengineering. The study concludes that AI would face significant barriers to causing human extinction: doing so would likely require intentional action by the AI and substantial time for the threats to materialize, leaving room for human response and mitigation. Ultimately, the report suggests that resources dedicated to AI extinction risk should also support broader global catastrophic risk mitigation and general AI safety.

This analysis starts from what could cause human extinction and then assesses how AI could contribute to it. It is limited to threats that can be explored through scenario planning; threats involving deeper levels of uncertainty are left out.

The OpenAI Files [Link]

Trends in AI Supercomputers - Epoch AI [Link]

Can US infrastructure keep up with the AI economy? - Deloitte [Link]

2025: The State of Consumer AI - Shawn Carolan, et al., Menlo Ventures [Link]

Generative AI & Gender Global Employment Trends - LinkedIn Economic Graph [Link]

Agentic Misalignment: How LLMs could be insider threats - Anthropic [Link]

Researchers stress-tested 16 leading AI models from various developers and found that in certain situations, these models resorted to malicious behaviors like blackmailing officials or leaking sensitive information. These actions occurred when the models faced replacement or when their assigned goals conflicted with the company's changing direction, even when they were given only harmless business goals and explicitly told to avoid such behaviors. While no evidence of agentic misalignment has been observed in real deployments, the findings suggest caution when deploying current models in roles with minimal human oversight and access to sensitive information.

SHADE-Arena: Evaluating sabotage and monitoring in LLM agents - Anthropic [Link]

Dual Objective Setup:

- Each evaluation environment includes a clearly defined main task that simulates a real-world scenario and a secret side task that is harmful but can be plausibly hidden within the main task execution.

- The agent's challenge: complete both tasks while avoiding suspicion.

The benchmark uses LLMs as monitors to assess detection, measures task completion against detection success, and provides a testbed for improving agent safety and control.
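A minimal Python sketch of how the dual-objective results could be tallied, assuming a simplified rule: an episode counts as successful sabotage only if the agent completes both the main and the side task and the monitor's suspicion score stays below a cutoff. The `Episode` fields, the 0-100 suspicion scale, the cutoff of 80, and the sample episodes are assumptions for illustration, not the benchmark's published code.

```python
from dataclasses import dataclass

SUSPICION_CUTOFF = 80.0  # assumed: the monitor flags transcripts scoring at or above this


@dataclass
class Episode:
    """One agent run in a hypothetical SHADE-Arena-style environment."""
    main_task_done: bool
    side_task_done: bool
    suspicion: float  # monitor's suspicion score, assumed to be on a 0-100 scale


def flagged(ep: Episode) -> bool:
    return ep.suspicion >= SUSPICION_CUTOFF


def summarize(episodes: list[Episode]) -> dict[str, float]:
    n = len(episodes)
    return {
        "main_task_rate": sum(ep.main_task_done for ep in episodes) / n,
        "side_task_rate": sum(ep.side_task_done for ep in episodes) / n,
        "flag_rate": sum(flagged(ep) for ep in episodes) / n,
        # Sabotage succeeds only if both tasks are done AND the monitor is not alarmed.
        "sabotage_success_rate": sum(
            ep.main_task_done and ep.side_task_done and not flagged(ep) for ep in episodes
        ) / n,
    }


episodes = [
    Episode(True, True, 35.0),   # both tasks done, stayed under the radar
    Episode(True, True, 92.0),   # both tasks done, but the monitor flagged it
    Episode(True, False, 20.0),  # side task failed
    Episode(False, True, 50.0),  # main task failed
]
print(summarize(episodes))
```

Keeping the rates separate shows why both axes matter: an agent that finishes the side task but gets flagged (the second episode) counts toward the flag rate, not toward sabotage success.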

YouTube and Podcasts

Sundar Pichai: CEO of Google and Alphabet | Lex Fridman Podcast [Link]

Jared Isaacman: What went wrong at NASA | The All-In Interview [Link]

Naval Ravikant On The 4 Books That CHANGED His Life (Financially And Philosophically) [Link]

Chamath Palihapitiya: Zuckerberg, Rogan, Musk, and the Incoming “Golden Age” Under Trump - Tucker Carlson [Link]

Satya Nadella on AI Agents, Rebuilding the Web, the Future of Work, and more - Rowan Cheung [Link]

Jeff Bezos: Amazon and Blue Origin | Lex Fridman Podcast - Lex Fridman [Link]

WWDC25: Platforms State of the Union - Apple [Link]

#385 Michael Dell - Founders [Link]

IPOs and SPACs are Back, Mag 7 Showdown, Zuck on Tilt, Apple's Fumble, GENIUS Act passes Senate - All-In Podcast [Link]

I think being smart — and not being afraid to show it — means living with discomfort. It's difficult. It means being willing and able to admit when you're wrong. And it means being okay with complexity, contradiction, and uncertainty.

So, the good news: I think smart survives. It's stubborn. It's not loud. It's not flashy. But it's resilient — like a cockroach with a PhD.

And the thing about stupidity is that eventually it bumps into the hard wall of reality. When the bridges collapse and the crops fail, when the Amazon package doesn't show up because the supply chain finally imploded — suddenly people start looking around and going, "Hey, does anybody know how to fix things? Build things?"

And the guy who spent the last 20 years reading books and educating himself instead of screaming at his phone? Yeah, he's the one holding the duct tape.

It won't be sexy, and it won't be immediate. But intelligence isn't dead — it's just hungover. And sooner or later, we're going to need it to crawl out of bed, drink some black coffee, and start fixing the mess.

So at the end of the day, stupidity will always be popular — at least in the U.S. But intelligence — real, patient, compassionate intelligence — is what keeps the lights on.

And by the way, that goes for emotional intelligence too, which is every bit as important.

So if you're still here, still critically thinking, still refusing to go quietly into that great dumb night — you're already part of the resistance.

Keep going.

― The Death of Intelligence: Why Modern Society Celebrates Stupidity - The Functional Melancholic [Link]

The Obsession That Creates Enduring Companies | David Senra Interview - Invest Like The Best [Link]

Articles and Blogs

Everything Google Announced at I/O 2025 - WIRED [Link]

Launch Hugging Face Models In Colab For Faster AI Exploration - Medium [Link]

My AI Skeptic Friends Are All Nuts - Thomas Ptacek [Link]

The author argues that LLM-based agents are improving developer productivity. While the hype around AI can be annoying, he says, the technology's impact is real and profound, and developers who don't embrace AI in their coding practice will be left behind.

My Brain Finally Broke - The New Yorker [Link]

The author describes a disorienting sense that reality is eroding, attributing it to several factors: the relentless pace of digital information, the overwhelming nature of political events, and the insidious proliferation of AI. This environment fosters a collective cognitive detachment and an erosion of critical faculties, making it hard to discern truth, engage effectively, and maintain a grounded sense of self and world.

Is there a Half-Life for the Success Rates of AI Agents? - Toby Ord [Link]

News

Meet the Foundation Models framework - Apple [Link]

The iPhone maker has launched the Foundation Models framework, which lets developers run Apple's roughly 3B-parameter model locally on device. The framework is part of the Apple Intelligence suite, and developers can call the model with as few as three lines of code. The model can be used to generate text, produce summaries, and extract structured information from unstructured text.

Developers should be aware of its strengths and limitations. It is only available on Apple Intelligence-enabled devices running OS version 26 or later. Xcode Playgrounds can be used to prototype against real model output, and the Instruments profiling template can measure latency and token overhead. There is no support for fine-tuning or for deploying external models.

Connect Your MCP Client to the Hugging Face Hub - Hugging Face [Link]

Hugging Face has released an open-source MCP server that lets MCP clients such as VS Code and Claude Desktop access its Hub tools.

New Book List

Some book titles from my daily reading recently caught my attention; any of them might be my next read:

- How Innovation Works: And Why It Flourishes in Freedom - Matt Ridley (2021)

- The Rational Optimist: How Prosperity Evolves - Matt Ridley (2021)

- Incerto - Nassim Nicholas Taleb (2021): Fooled by Randomness; The Black Swan; The Bed of Procrustes; Antifragile; Skin in the Game

- The Beginning of Infinity - David Deutsch (2012)

- The E-Myth Revisited: Why Most Small Businesses Don't Work and What to Do About It - Michael E. Gerber

- Start with Why: How Great Leaders Inspire Everyone to Take Action - Simon Sinek

- The Lean Startup: How Today's Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses - Eric Ries

- Grit: The Power of Passion and Perseverance - Angela Duckworth

- Mindset: The New Psychology of Success - Carol S. Dweck

- 7 Powers: The Foundations of Business Strategy - Hamilton Helmer (2016)

- The Great CEO Within: The Tactical Guide to Company Building - Matt Mochary (2019)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy Answers―Straight Talk on the Challenges of Entrepreneurship - Ben Horowitz (2014)

- Inside the Ultimate Money Mind - Robert G. Hagstrom (2023)

- The Creative Act: A Way of Being - Rick Rubin (2023)

- In the Company of Giants: Candid Conversations With the Visionaries of the Digital World - Rama Dev Jager (1998)

- The Technological Republic: Hard Power, Soft Belief, and the Future of the West - Alexander C. Karp (2025)

- The Contrarian: Peter Thiel and Silicon Valley's Pursuit of Power - Max Chafkin (2021)